Deploying Axual Governance on OpenShift

Use the following instructions to deploy Axual Governance on OpenShift, so you can onboard your own Kafka cluster using the Self-Service interface.

Prerequisites

To install Axual Governance in your own infrastructure, you need:

- Credentials for the Axual Registry (https://docker.axual.io). If you do not have credentials yet, please request them here: https://insights.axual.com/credentials
- An OpenShift cluster (version 4.12 or above) with an Ingress controller
- Helm (>= version 3)
- Your favorite terminal
- For the Kafka cluster you are onboarding:
  - Connectivity information (endpoint, port)
  - Security information (certificates, SASL credentials)

Kafka and Schema Registry authentication

Depending on the type of authentication which is enabled on Kafka and Schema Registry, there are additional prerequisites. Check the prerequisites for your type of authentication below.

Kafka authentication

| Mutual TLS (mTLS) | SASL |
|---|---|
| If you are using mutual TLS (mTLS) to authenticate to Kafka, there are additional prerequisites: | If you are using SASL to authenticate to Kafka, there are additional prerequisites: |

Schema registry authentication

If you use authentication on the schema registry interface, you need some additional information:

| Basic authentication | TLS |
|---|---|
| The username and password to authenticate to Schema Registry | |

| Axual Governance only supports X.509 certificates and PKCS8 private keys, both in PEM format. The file extension of the certificate and private key can be anything (for example .crt, .pem, .key or .p8). |
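Because the file extension says nothing about the actual format, it can help to look at the PEM header before uploading a file. The sketch below is a hypothetical helper (the name `pem_kind` is not part of Axual Governance) that reports the type based on the first `-----BEGIN` line. It relies only on the standard PEM labels: an unencrypted PKCS8 key starts with `BEGIN PRIVATE KEY`, whereas `BEGIN RSA PRIVATE KEY` indicates a PKCS1 key.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Axual Governance): print the kind of PEM
# object found in a file, based on its first "-----BEGIN" header line.
pem_kind() {
  local header
  header=$(grep -m1 -e '-----BEGIN ' "$1" 2>/dev/null) || { echo "not-pem"; return 1; }
  case "$header" in
    "-----BEGIN PRIVATE KEY-----")           echo "pkcs8-key" ;;
    "-----BEGIN ENCRYPTED PRIVATE KEY-----") echo "pkcs8-encrypted-key" ;;
    "-----BEGIN CERTIFICATE-----")           echo "x509-certificate" ;;
    "-----BEGIN RSA PRIVATE KEY-----")       echo "pkcs1-key" ;;
    "-----BEGIN EC PRIVATE KEY-----")        echo "sec1-key" ;;
    *)                                       echo "unknown-pem" ;;
  esac
}

# Example usage (the file name is a placeholder):
#   pem_kind my-application.key
```

If the helper reports `pkcs1-key`, a command such as `openssl pkcs8 -topk8 -nocrypt -in my-application.key -out my-application.p8` converts the key to unencrypted PKCS8, assuming OpenSSL is installed on your machine.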

Installation procedure

By following the steps below, you will deploy Axual Governance first, after which you will onboard your own Kafka cluster to it and set up resources in Self-Service so that people in your organization can start using the platform.

Step 1: Deploying Axual Governance

- Log in as `kubeadmin` to make sure you have the right permissions to perform the installation

      oc login -u kubeadmin -p <your-password> https://api.crc.testing:6443

- Create a new OpenShift project

      oc new-project axual

- Add the OpenShift helm charts repo to make sure you can install the Axual Governance helm charts

      helm repo add openshift-helm-charts https://charts.openshift.io/
      helm repo update

- Persist the credentials you have received for the Axual Registry, so they can be used by helm charts during deployment:

      kubectl -n axual create secret docker-registry axualdockercred \
        --docker-server=docker.axual.io \
        --docker-username=[AXUAL_REGISTRY_USERNAME] \
        --docker-password=[AXUAL_REGISTRY_PASSWORD]

- Download the example "axual-governance-openshift.values.yaml"

global: clusterDomain: "cluster.local" imageRegistry: "docker.axual.io" imagePullSecrets: - name: axualdockercred platform-manager: enabled: true platform-ui: enabled: true api-gateway: enabled: true organization-mgmt: enabled: true topic-browse: enabled: true api-gateway: config: gateway: endpoints: platformManager: enabled: true url: "http://axual-governance-platform-manager" organizationManager: enabled: true url: "http://axual-governance-organization-mgmt" topicBrowse: enabled: true url: "http://axual-governance-topic-browse" billing: enabled: false metricsExposer: enabled: false platformUi: enabled: true url: "http://axual-governance-platform-ui" keycloak: enabled: true url: "http://keycloak:8080" topic-browse-config-api: url: "http://axual-governance-platform-manager/api/stream_configs/{id}/browse-config" permissions-api: url: "http://axual-governance-platform-manager/api/auth" local: auth: issuerUrlForValidation: https://axual-governance.apps-crc.testing/auth/realms/local jwkSetUri: http://keycloak:8080/auth/realms/local/protocol/openid-connect/certs sso: keycloak: advertisedBaseUrl: https://axual-governance.apps-crc.testing internalBaseUrl: http://keycloak:8080 useInsecureTrustManager: true logging: filter: enabled: true route: enabled: true annotations: {} labels: {} host: "axual-governance.apps-crc.testing" path: "/" tls: termination: "edge" platform-manager: debug: enabled: false image: pullPolicy: "Always" mtls: enabled: false config: spring: datasource: name: "fluxdb" url: "jdbc:mysql://axual-platform-manager-mysql:3306/selfservicedb?useSSL=false&useLegacyDatetimeCode=false&serverTimezone=UTC" username: "fluxmaster" password: "Passw0rd" driver-class-name: "com.mysql.cj.jdbc.Driver" jpa.database-platform: "org.hibernate.dialect.MySQLDialect" flyway: locations: "classpath:db/migration/mysql" axual: api.available.auth.methods: "SSL, SCRAM_SHA_512" instance-api: available: false operation-manager: available: false connect: available: false client: 
socket-timeout: 60000 security: header-based-auth: true # Disable Keycloak and rely on headers passed from API Gateway governance: vault: enabled: false uri: "http://vault:8200" path: "governance" # Required: change to your roleId for PlatformManager, see step 9 above roleId: "744062d7-1d86-3496-bfdc-76f4d7352a2e" # Required: change to your secretId for PlatformManager, see step 9 above secretId: "634799b5-3ac7-ff6e-071f-a620243318fe" # Vault Configuration for Connectors vault: enabled: false azure: keyVault: enabled: false subscription-management: enabled: false server: ssl: enabled: false forward-headers-strategy: framework platform-ui: platformManager: fqdn: "axual-governance.apps-crc.testing" # Window.ENV Configuration config: docsVersion: "2023.3" mgmtApiUrl: "https://axual-governance.apps-crc.testing/api" mgmtUiUrl: "https://axual-governance.apps-crc.testing/" organizationManagerUrl: "https://axual-governance.apps-crc.testing/api/organizations" topicBrowseUrl: 'https://axual-governance.apps-crc.testing/api/stream_configs' stripeBillingManagementUrl: "https://billing.stripe.com/p/login/test_00g15x1UV4hKcI8eUU" # Feature Flags billingEnabled: false insightsEnabled: false dataClassificationEnabled: false connectEnabled: false clientId: "self-service" clientSecret: "notSecret" configurationType: "remote" # Stream Browsing streamBrowseEnabled: false # This is for deciding on SB/CB(false) or TB(true) topicBrowseEnabled: true # Hide multiple environments singleEnvironmentEnabled: true organizationShortNameEditEnabled: true # Stripe Subscription subscriptionEnabled: false # Keycloak Configuration keycloakEnabled: true oidcScopes: "openid profile email" responseTypes: "code" oidcEndpoint: "https://axual-governance.apps-crc.testing" # Wizard Allowed Providers enabledKafkaProviders: - aiven - confluent_cloud - apache_kafka organization-mgmt: config: authStrategy: "keycloak" keycloakDomain: "http://keycloak:8080" keycloakCLIUsername: "admin" keycloakCLIPassword: "admin" 
tlsVerification: false

The provided "axual-governance-openshift.values.yaml" assumes you use a local installation of OpenShift to verify the installation. If your OpenShift cluster has a different base URL, be sure to replace the occurrences of `https://axual-governance.apps-crc.testing` with the URL which applies to your situation.
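For a cluster with a different base URL, the replacement can be scripted rather than done by hand. The following is a minimal sketch, assuming your new hostname contains no characters that are special to `sed` (such as `/` or `&`). The function name `replace_governance_host` and the hostname `governance.example.com` are placeholders, not names used by Axual Governance. It replaces the hostname rather than the full URL, so entries without the `https://` prefix (such as the route `host`) are covered as well.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a copy of a file in which every occurrence of the
# local CRC hostname is replaced by your own hostname.
# Arguments: <new-hostname> <input-file> <output-file>
replace_governance_host() {
  local new_host="$1" in_file="$2" out_file="$3"
  sed "s/axual-governance\.apps-crc\.testing/${new_host}/g" "$in_file" > "$out_file"
}

# Example usage (hostname and output file name are placeholders):
#   replace_governance_host governance.example.com \
#     axual-governance-openshift.values.yaml my-governance.values.yaml
```

The same hostname also appears in "keycloak-local-realm.json" (`rootUrl` and `adminUrl`), so that file likely needs the same treatment before you import it.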

- Deploy the MySQL persistent database for Platform Manager.

      oc new-app --template=mysql-persistent \
        --param=DATABASE_SERVICE_NAME=axual-platform-manager-mysql \
        --param=MYSQL_DATABASE=selfservicedb \
        --param=MYSQL_USER=fluxmaster \
        --param=MYSQL_PASSWORD=Passw0rd \
        --param=MYSQL_ROOT_PASSWORD=rootpassword

  If you decide to customize the passwords, be sure to update them in "axual-governance-openshift.values.yaml"

- Download the example "keycloak.yaml" which is needed to install and configure Keycloak

      kind: Template
      apiVersion: template.openshift.io/v1
      metadata:
        name: keycloak
        annotations:
          description: An example template for trying out Keycloak on OpenShift
          iconClass: icon-sso
          openshift.io/display-name: Keycloak
          tags: keycloak
          version: 23.0.3
      objects:
        - apiVersion: v1
          kind: Service
          metadata:
            annotations:
              description: The web server's http port.
            labels:
              application: '${APPLICATION_NAME}'
            name: '${APPLICATION_NAME}'
          spec:
            ports:
              - port: 8080
                targetPort: 8080
            selector:
              deploymentConfig: '${APPLICATION_NAME}'
        - apiVersion: v1
          id: '${APPLICATION_NAME}'
          kind: Route
          metadata:
            annotations:
              description: Route for application's service.
            labels:
              application: '${APPLICATION_NAME}'
            name: '${APPLICATION_NAME}'
          spec:
            host: '${HOSTNAME}'
            tls:
              termination: edge
            to:
              name: '${APPLICATION_NAME}'
        - apiVersion: v1
          kind: DeploymentConfig
          metadata:
            labels:
              application: '${APPLICATION_NAME}'
            name: '${APPLICATION_NAME}'
          spec:
            replicas: 1
            selector:
              deploymentConfig: '${APPLICATION_NAME}'
            strategy:
              type: Recreate
            template:
              metadata:
                labels:
                  application: '${APPLICATION_NAME}'
                  deploymentConfig: '${APPLICATION_NAME}'
                name: '${APPLICATION_NAME}'
              spec:
                containers:
                  - env:
                      - name: KEYCLOAK_ADMIN
                        value: '${KEYCLOAK_ADMIN}'
                      - name: KEYCLOAK_ADMIN_PASSWORD
                        value: '${KEYCLOAK_ADMIN_PASSWORD}'
                      - name: KC_PROXY
                        value: 'edge'
                      - name: KC_HTTP_RELATIVE_PATH
                        value: '/auth'
                    image: quay.io/keycloak/keycloak:23.0.3
                    livenessProbe:
                      failureThreshold: 100
                      httpGet:
                        path: /auth
                        port: 8080
                        scheme: HTTP
                      initialDelaySeconds: 60
                    name: '${APPLICATION_NAME}'
                    ports:
                      - containerPort: 8080
                        protocol: TCP
                    readinessProbe:
                      failureThreshold: 300
                      httpGet:
                        path: /auth
                        port: 8080
                        scheme: HTTP
                      initialDelaySeconds: 30
                    securityContext:
                      privileged: false
                    volumeMounts:
                      - mountPath: /opt/keycloak/data
                        name: empty
                    args: ["start-dev"]
                volumes:
                  - name: empty
                    emptyDir: {}
            triggers:
              - type: ConfigChange
      parameters:
        - name: APPLICATION_NAME
          displayName: Application Name
          description: The name for the application.
          value: keycloak
          required: true
        - name: KEYCLOAK_ADMIN
          displayName: Keycloak Administrator Username
          description: Keycloak Server administrator username
          generate: expression
          from: '[a-zA-Z0-9]{8}'
          required: true
        - name: KEYCLOAK_ADMIN_PASSWORD
          displayName: Keycloak Administrator Password
          description: Keycloak Server administrator password
          generate: expression
          from: '[a-zA-Z0-9]{8}'
          required: true
        - name: HOSTNAME
          displayName: Custom Route Hostname
          description: >-
            Custom hostname for the service route. Leave blank for default hostname,
            e.g.: <application-name>-<namespace>.<default-domain-suffix>
        - name: NAMESPACE
          displayName: Namespace used for DNS discovery
          description: >-
            This namespace is a part of DNS query sent to Kubernetes API. This query
            allows the DNS_PING protocol to extract cluster members. This parameter
            might be removed once https://issues.jboss.org/browse/JGRP-2292 is
            implemented.
          required: true

- Deploy Keycloak using the "keycloak.yaml" you just downloaded

      oc process -f keycloak.yaml \
        -p KEYCLOAK_ADMIN=admin \
        -p KEYCLOAK_ADMIN_PASSWORD=admin \
        -p NAMESPACE=axual \
        | oc create -f -

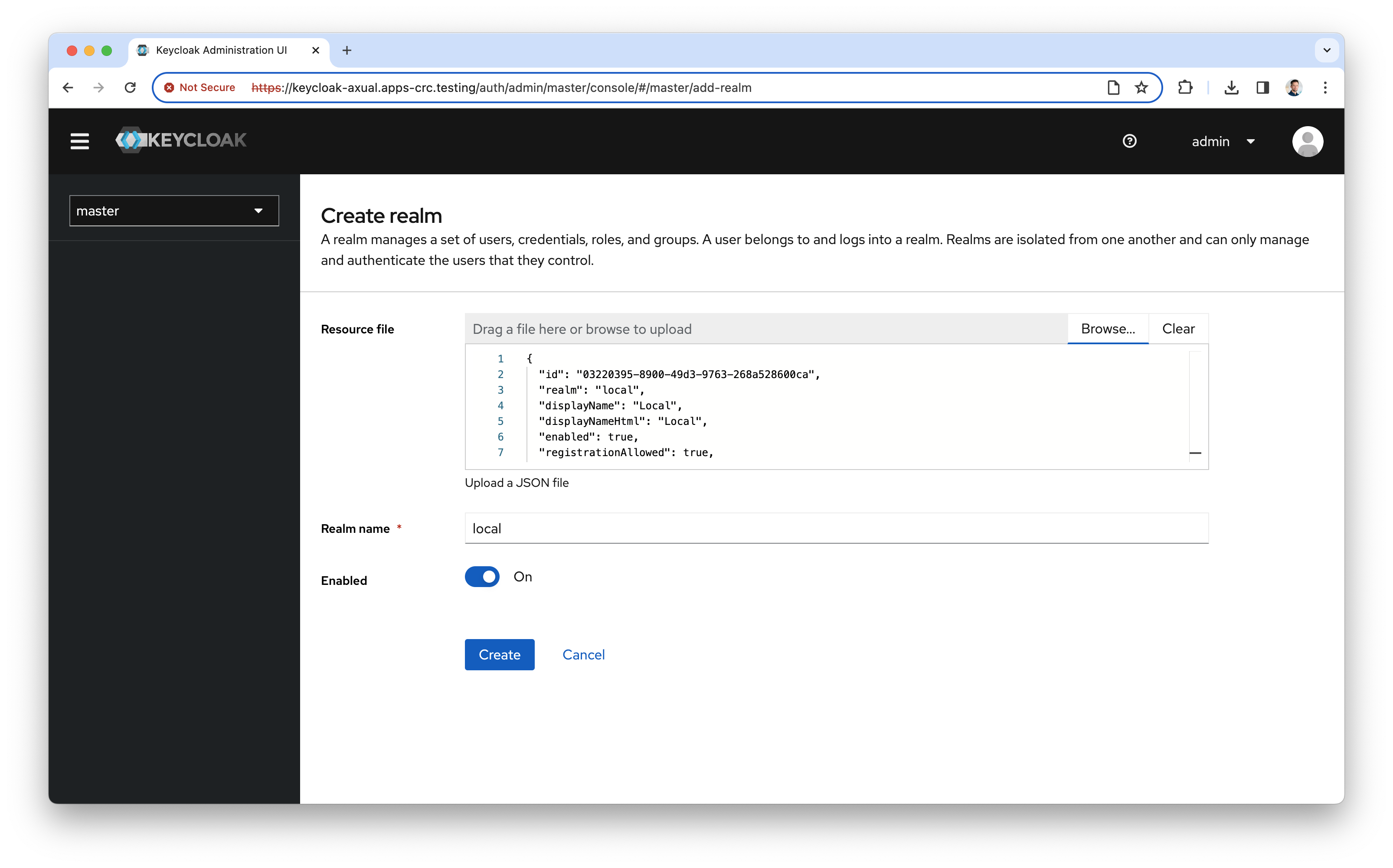

- Configure a local realm for Axual Governance. First download the "keycloak-local-realm.json" file below.

      {
        "id": "03220395-8900-49d3-9763-268a528600ca",
        "realm": "local",
        "displayName": "Local",
        "displayNameHtml": "Local",
        "enabled": true,
        "registrationAllowed": true,
        "clients": [
          {
            "id": "3d03c347-f6cc-451b-9671-fce8b7ca3bfe",
            "clientId": "self-service",
            "name": "Self Service Client",
            "description": "Client used by the Self-Service to authenticate users",
            "rootUrl": "https://axual-governance.apps-crc.testing",
            "adminUrl": "https://axual-governance.apps-crc.testing",
            "baseUrl": "",
            "surrogateAuthRequired": false,
            "enabled": true,
            "alwaysDisplayInConsole": false,
            "clientAuthenticatorType": "client-secret",
            "redirectUris": ["*"],
            "webOrigins": ["*"],
            "notBefore": 0,
            "bearerOnly": false,
            "consentRequired": false,
            "standardFlowEnabled": true,
            "implicitFlowEnabled": false,
            "directAccessGrantsEnabled": true,
            "serviceAccountsEnabled": false,
            "publicClient": true,
            "frontchannelLogout": true,
            "protocol": "openid-connect",
            "attributes": {
              "oidc.ciba.grant.enabled": "false",
              "post.logout.redirect.uris": "*",
              "oauth2.device.authorization.grant.enabled": "false",
              "backchannel.logout.session.required": "true",
              "backchannel.logout.revoke.offline.tokens": "false"
            },
            "authenticationFlowBindingOverrides": {},
            "fullScopeAllowed": true,
            "nodeReRegistrationTimeout": -1,
            "protocolMappers": [
              {
                "id": "e2f65234-0851-4c56-aef8-e570571903cc",
                "name": "TenantShortName Mapper",
                "protocol": "openid-connect",
                "protocolMapper": "oidc-usermodel-attribute-mapper",
                "consentRequired": false,
                "config": {
                  "introspection.token.claim": "true",
                  "userinfo.token.claim": "true",
                  "user.attribute": "tenant-short-name",
                  "id.token.claim": "true",
                  "access.token.claim": "true",
                  "claim.name": "tenant_short_name",
                  "jsonType.label": "String"
                }
              },
              {
                "id": "9c6f38e8-483f-4f2f-bd15-19dc5462e77d",
                "name": "TenantName Mapper",
                "protocol": "openid-connect",
                "protocolMapper": "oidc-usermodel-attribute-mapper",
                "consentRequired": false,
                "config": {
                  "introspection.token.claim": "true",
                  "userinfo.token.claim": "true",
                  "user.attribute": "tenant-name",
                  "id.token.claim": "true",
                  "access.token.claim": "true",
                  "claim.name": "tenant_name",
                  "jsonType.label": "String"
                }
              }
            ]
          }
        ]
      }

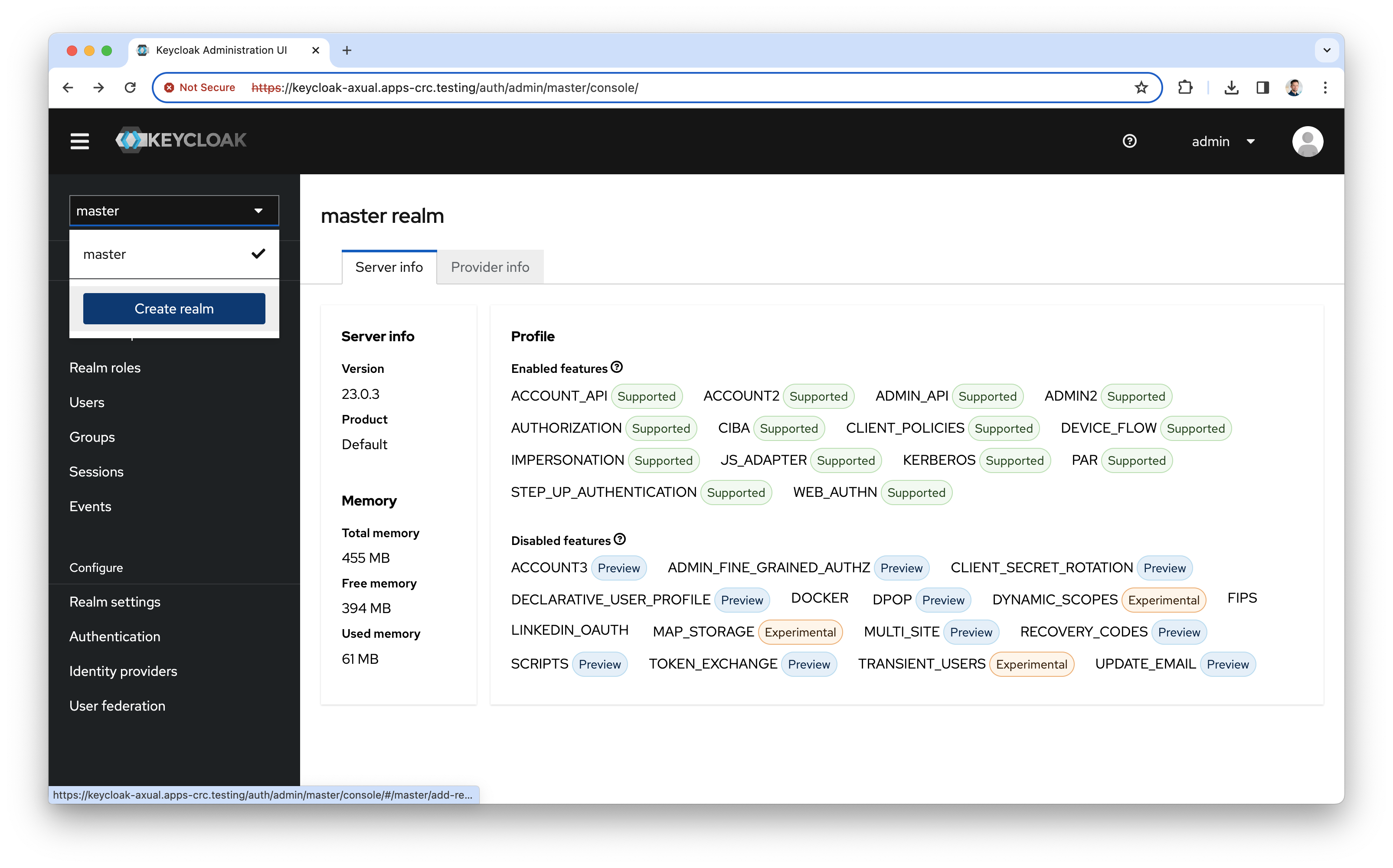

- Open the Keycloak admin interface via https://keycloak-axual.apps-crc.testing/auth/admin/master/console/. Use the credentials from the previous step. The following screen will show:
- Click the dropdown and the "Create realm" button
- Browse for the "keycloak-local-realm.json" file and import it by clicking the "Browse" button
- Click "Create" to create the local realm.

- Deploy HashiCorp Vault

      helm upgrade --install vault openshift-helm-charts/hashicorp-vault

- Wait for the pod `vault-0` to be in a ready state before you continue with the next steps.
- Install Axual Governance

      helm install axual-governance axual-stable/axual-governance --version 0.5.0 -f ./axual-governance-openshift.values.yaml -n axual

- Axual Governance needs HashiCorp Vault to securely store the credentials of the Kafka cluster you are onboarding. The following steps will make the installation of Vault a smooth experience.
  - First, create an alias for the Vault CLI which will be reused later and initialize Vault

        alias v='kubectl -n axual exec --stdin=true vault-0 -- '
        v vault operator init -key-shares=1 -key-threshold=1

    Keep the values for Unseal Key and Root Token in a safe place; you will need them later.
  - Log in to Vault using the Unseal Key and Root Token

        v vault operator unseal [UNSEAL_KEY]
        v vault login [ROOT_TOKEN]

  - Prepare Vault to be used by the platform. Copy the commands below:

        v vault secrets enable -path=governance kv-v2
        v vault auth enable approle
        echo 'path "governance/*" {capabilities = ["read","create","update","delete"]}' | v vault policy write platform-manager -
        v vault write auth/approle/role/platform-manager token_policies="platform-manager"
        v vault read auth/approle/role/platform-manager/role-id
        v vault write -force auth/approle/role/platform-manager/secret-id

    Find the `role_id` and `secret_id` in the output of the last two commands and store them in a safe place.

  - Update the configuration for `platform-manager` in "axual-governance-openshift.values.yaml" to use the `role_id` and `secret_id` generated above and set `vault.enabled` to true

        platform-manager:
          governance:
            vault:
              enabled: true
              uri: "http://axual-governance-platform-manager-vault:8200"
              path: "governance"
              roleId: "[ROLE_ID]" # Insert the value of `role_id` here (between double quotes)
              secretId: "[SECRET_ID]" # Insert the value of `secret_id` here (between double quotes)

  - Apply the changes to the "axual-governance-openshift.values.yaml" file

        helm upgrade --install axual-governance axual-stable/axual-governance --version 0.5.0 -f ./axual-governance-openshift.values.yaml -n axual
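If you repeat the Vault steps above, for example in a script, the `role_id` and `secret_id` can be picked out of the command output instead of being copied by hand. The sketch below assumes Vault prints its default two-column table (a `Key` column followed by a `Value` column). The helper name `vault_field` is hypothetical. Because shell aliases such as `v` are normally not expanded inside scripts, the usage comments spell out the full `kubectl` command.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read Vault's default table output on stdin and print the
# value belonging to the key given as first argument (exact match on the key).
vault_field() {
  awk -v key="$1" '$1 == key { print $2; exit }'
}

# Example usage, assuming Vault is initialized, unsealed and you are logged in:
#   ROLE_ID=$(kubectl -n axual exec --stdin=true vault-0 -- \
#     vault read auth/approle/role/platform-manager/role-id | vault_field role_id)
#   SECRET_ID=$(kubectl -n axual exec --stdin=true vault-0 -- \
#     vault write -force auth/approle/role/platform-manager/secret-id | vault_field secret_id)
```

The exact match on the key matters: the `secret-id` output also contains keys such as `secret_id_accessor`, which this helper skips.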

-

-

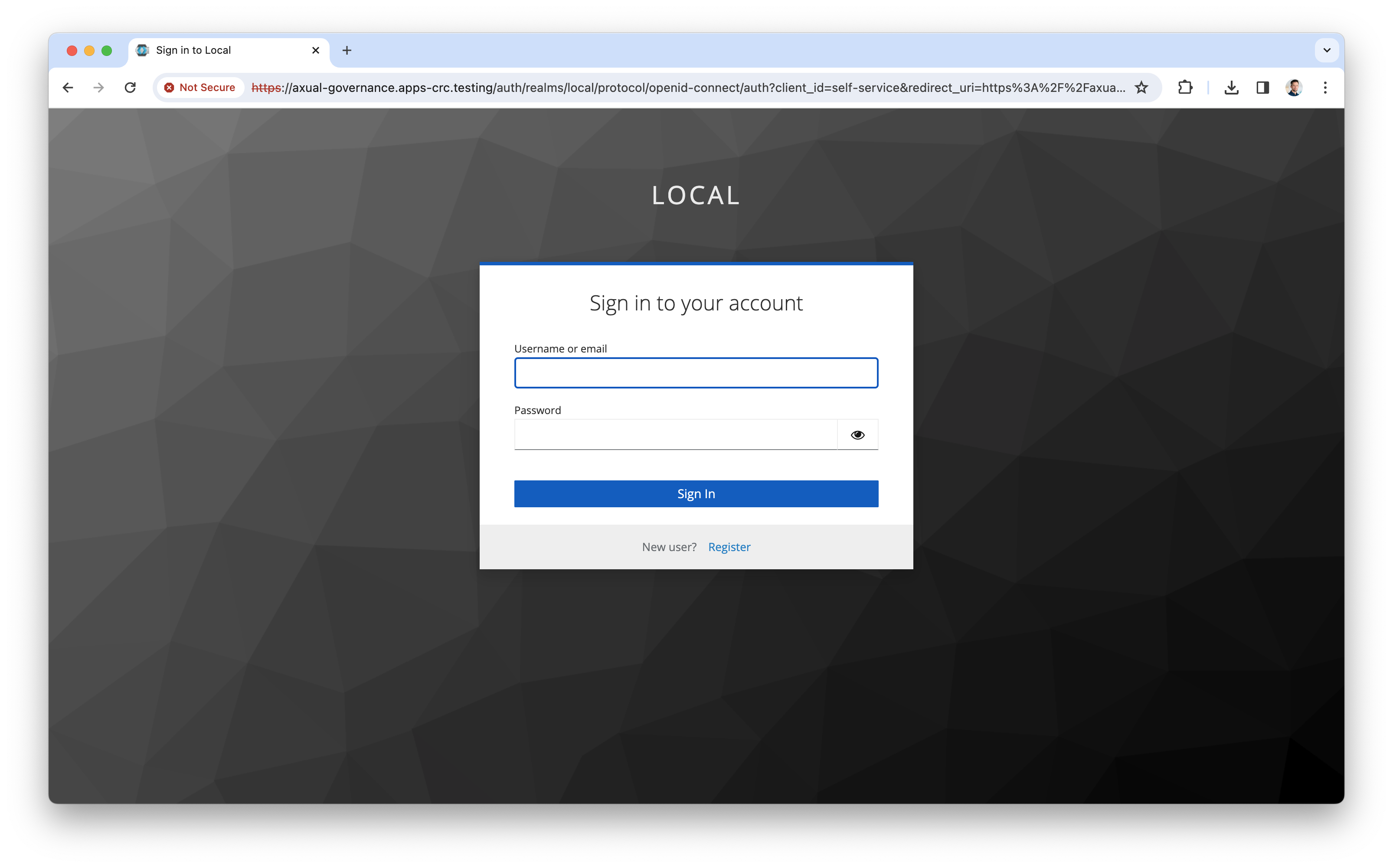

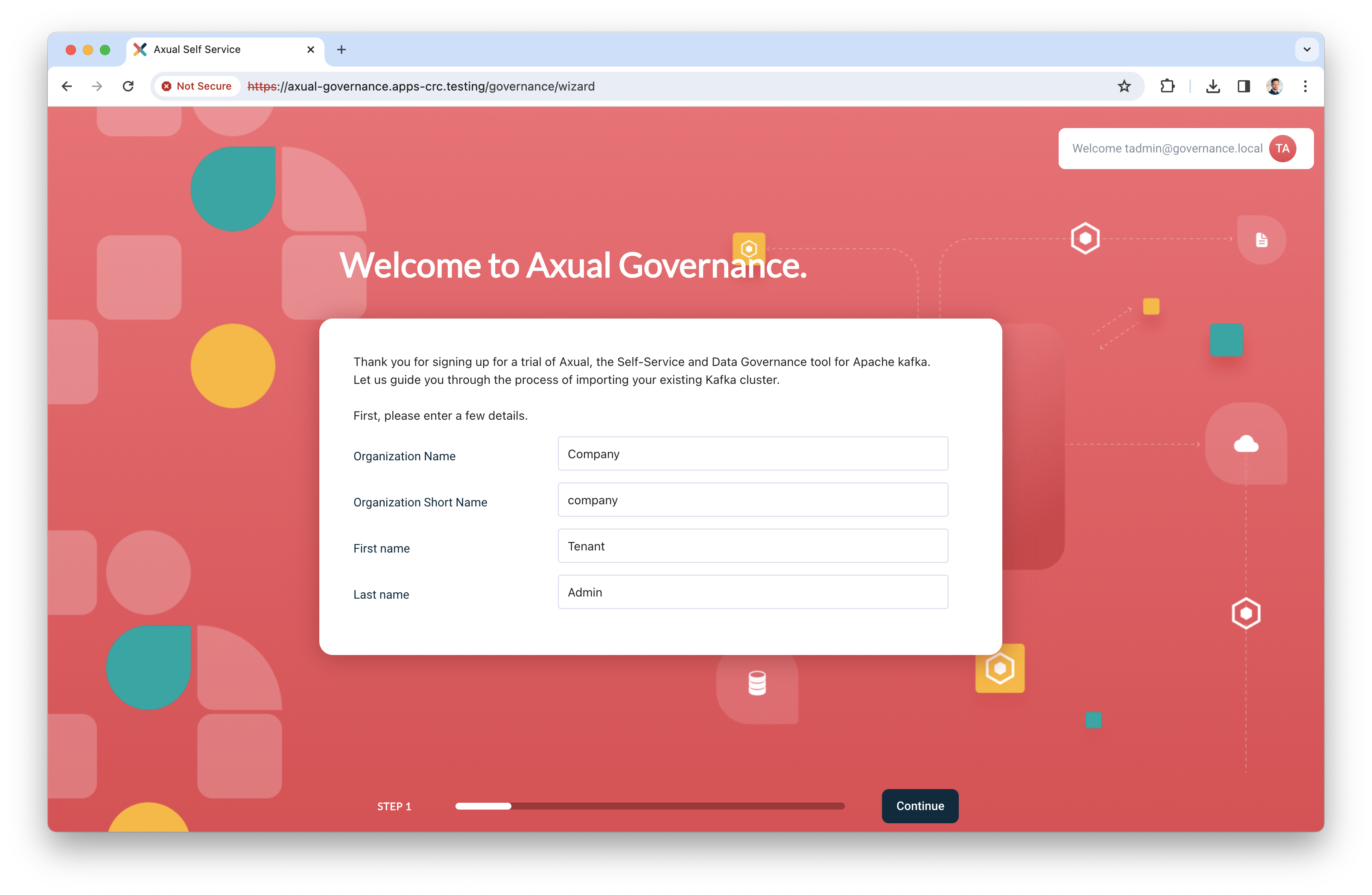

Finally, log into the Self-Service interface via the following URL: https://axual-governance.apps-crc.testing/. The following screen will be shown:

-

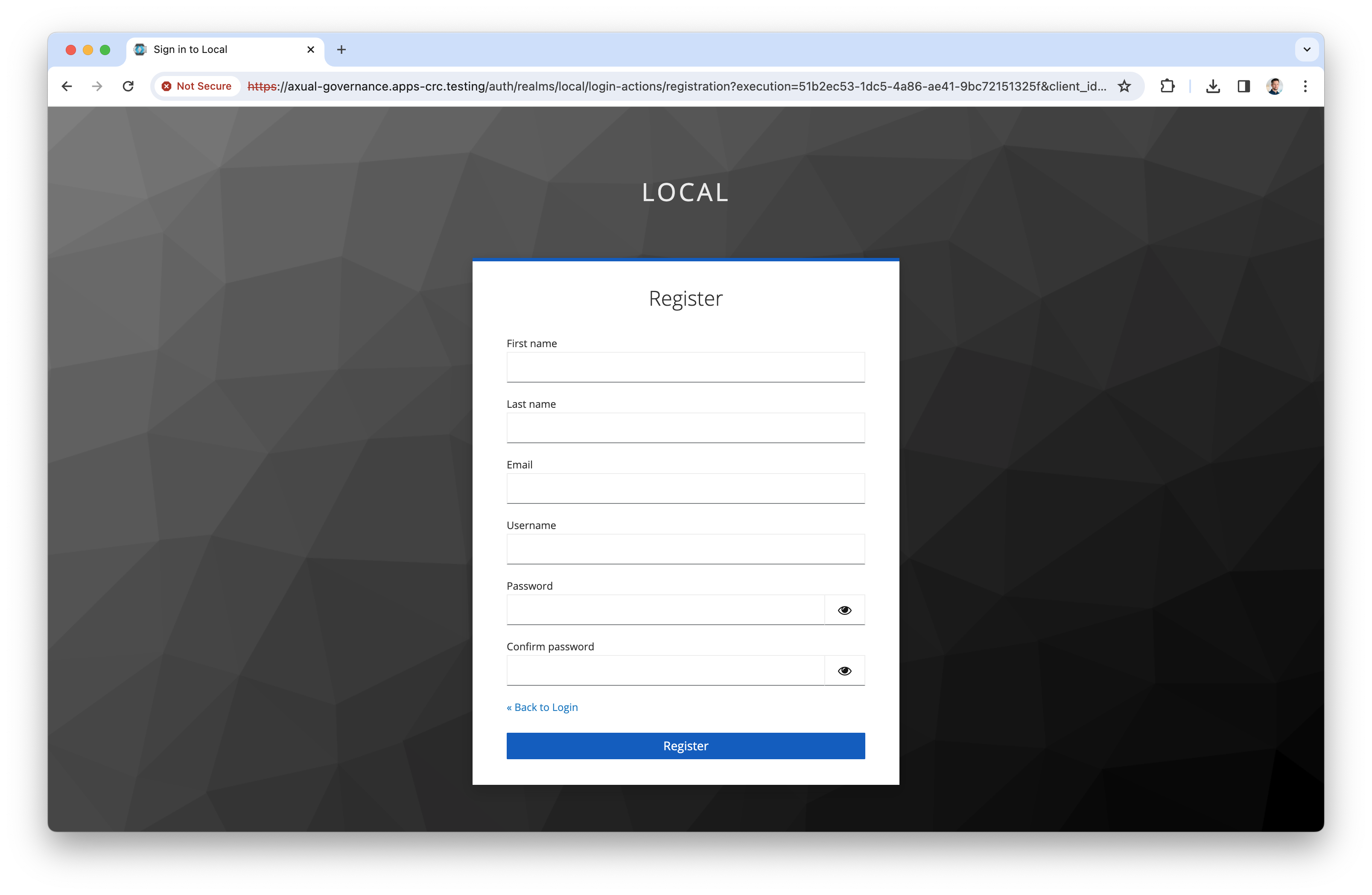

Click "Register user" to register a tenant admin user. This user has administrative privileges on the platform. The following screen will be shown:

-

Register the tenant admin on your platform. Enter details for the following fields

-

First name

-

Last name

-

Email

-

Username → you will use this to log in next time

-

Password

-

Confirm password

-

-

Click "Register" to add the user, the following screen will be shown:

-

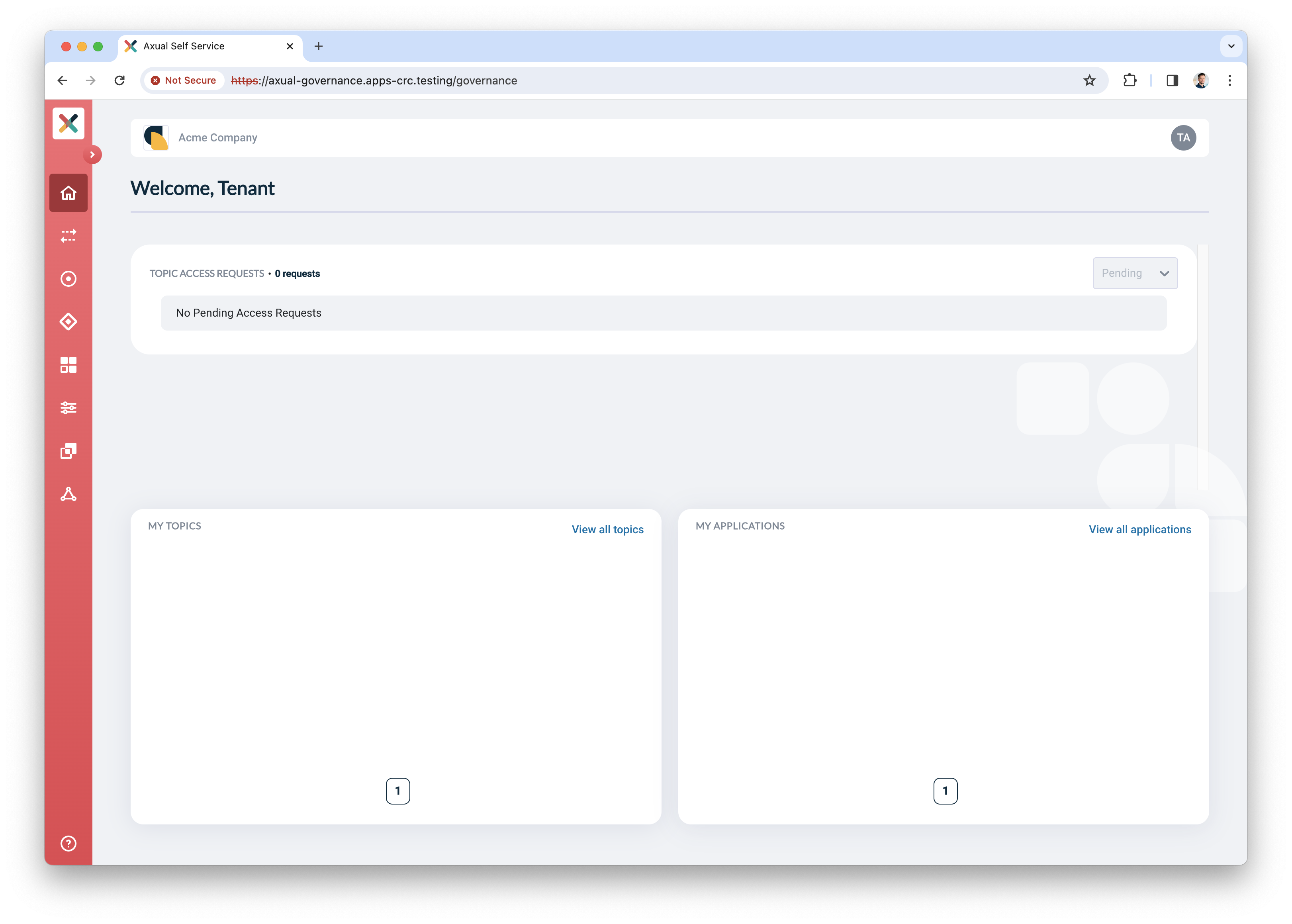

Add the details of your organization and click "Continue". You will be redirected to the dashboard, which looks like this:

By completing the steps above, you have deployed Axual Governance and prepared Self-Service to log in as a tenant admin.

You can now continue with Step 2: Onboarding your own Kafka cluster

Step 2: Onboarding your own Kafka cluster

In this step, you are going to onboard your own Kafka cluster in Axual Governance, so you can create and configure topics and authorize applications in a self-service fashion.

| This onboarding procedure only supports onboarding an existing Kafka cluster to be used for new topics and ACLs. |

| Only continue the onboarding when the following prerequisites are met: |

Onboarding the cluster

-

Log in to Self-Service using the tenant admin credentials

-

Expand the menu to see all items.

-

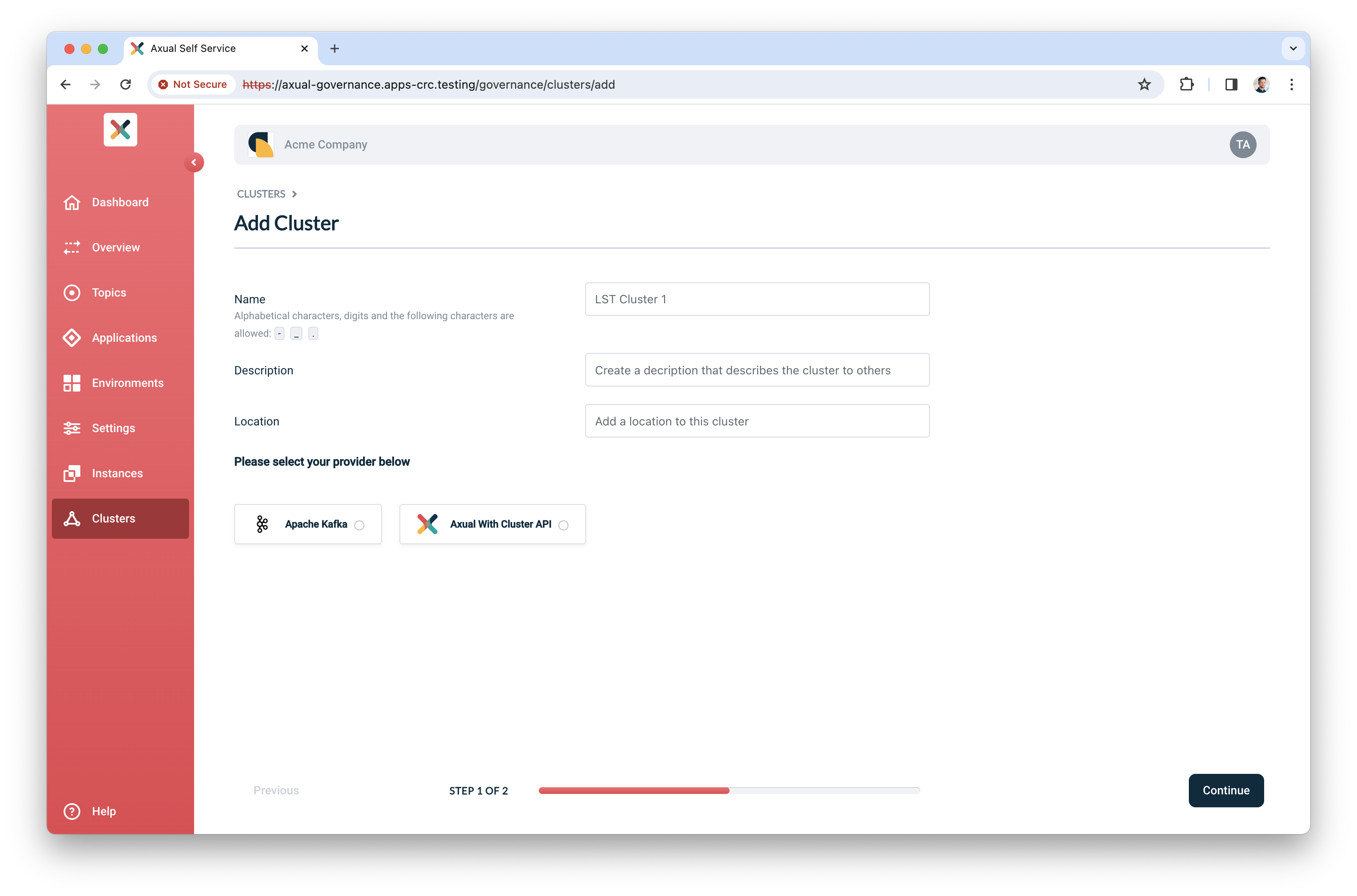

Click "Clusters", followed by "Add cluster". The following screen will show:

-

Fill in the following information for your cluster:

-

Name: use a name to refer to your cluster

-

Description: provide a description for this cluster (e.g. dev)

-

Location: additional metadata which helps you to recognize it

-

-

Select "Apache Kafka" as the provider.

-

Click "Continue".

-

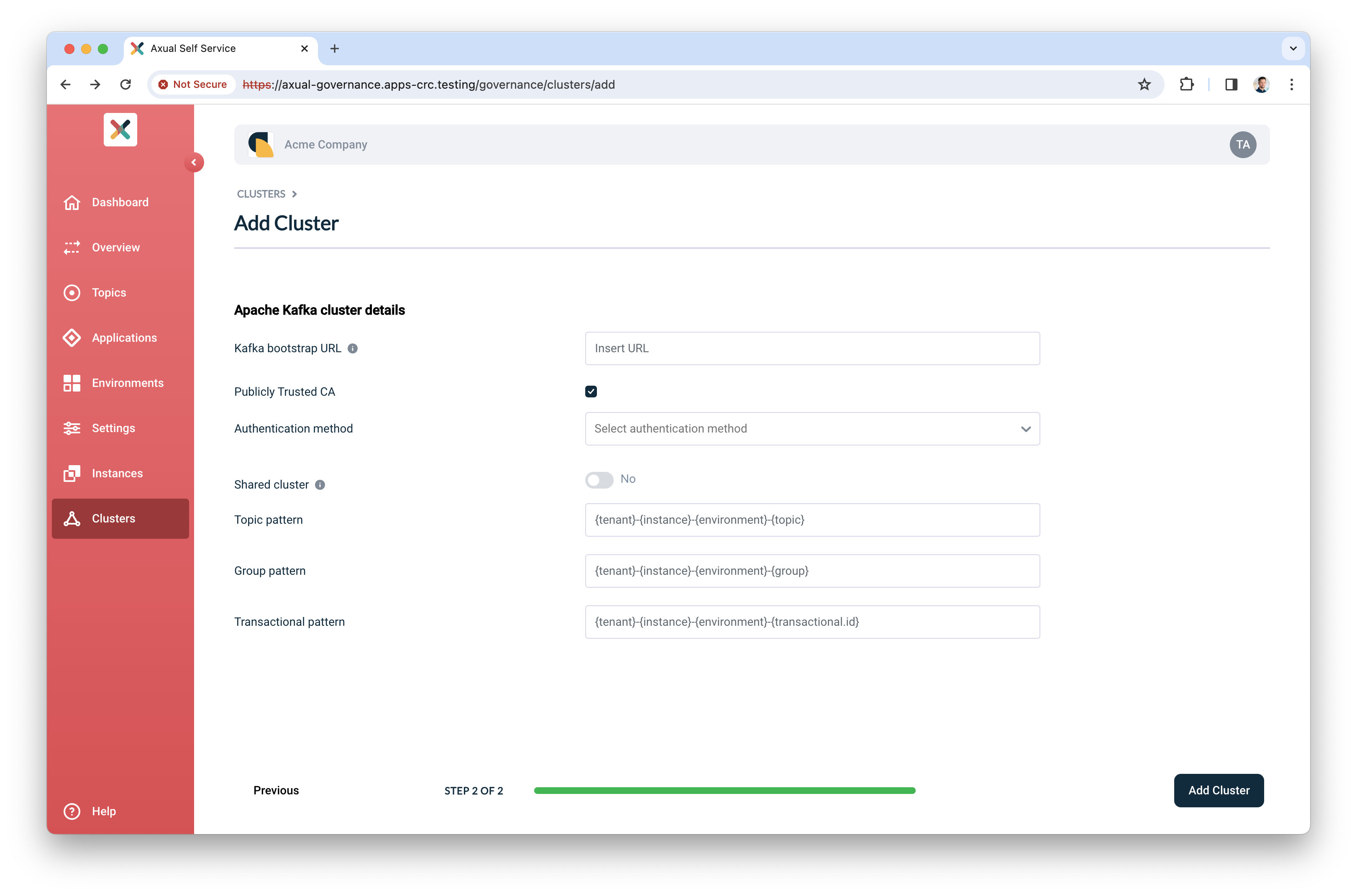

Provide the following information:

-

Kafka bootstrap URL: use the URL and port on which Platform Manager can reach the brokers

-

Publicly Trusted CA: select this if the CA of the brokers is publicly trusted OR provide the CA (PEM)

-

CA (PEM): The PEM file of the CA certificate which is used to sign the broker’s certificate

-

-

Choose your authentication method.

-

For TLS, provide the Certificate (PEM) and associated Private Key which Platform Manager uses to authenticate to the brokers

-

For SASL:

-

Select the SASL mechanism (

PLAIN,SCRAM_SHA_256andSCRAM_SHA_512are supported) -

Provide the Username and Password

-

-

-

Click Verify to see whether the provided details are working. Continue when the verify action returns "Broker connection verified"

-

Leave "Shared cluster" as is

-

Use the following patterns for multi environment support (see Topic, Consumer Group and Transactional ID Patterns for more information)

-

Topic:

{instance}-{environment}-{topic} -

Group:

{instance}-{environment}-{application.id} -

Transactional:

{instance}-{environment}-{application.id}-{transactional.id}

-

-

Finally, click "Add cluster" to persist all information

Preparing Self-Service

Topics and Applications exist in Environments. In the following steps, you will create an Instance associated with the cluster you just created. Next, you will assign a new environment to this new instance. It is good to know that in the future, you can decide to add more environments to this instance.

Creating an instance

-

In the menu click "Instances", followed by "New instance"

-

Provide the following information:

-

Name: a name to describe your instance (e.g. DTA for Dev, Test, Acceptance or Production)

-

Short name: a short name for your instance. Depending on the topic pattern, this name might end up in your topic name

-

Description: add your own description for this instance.

-

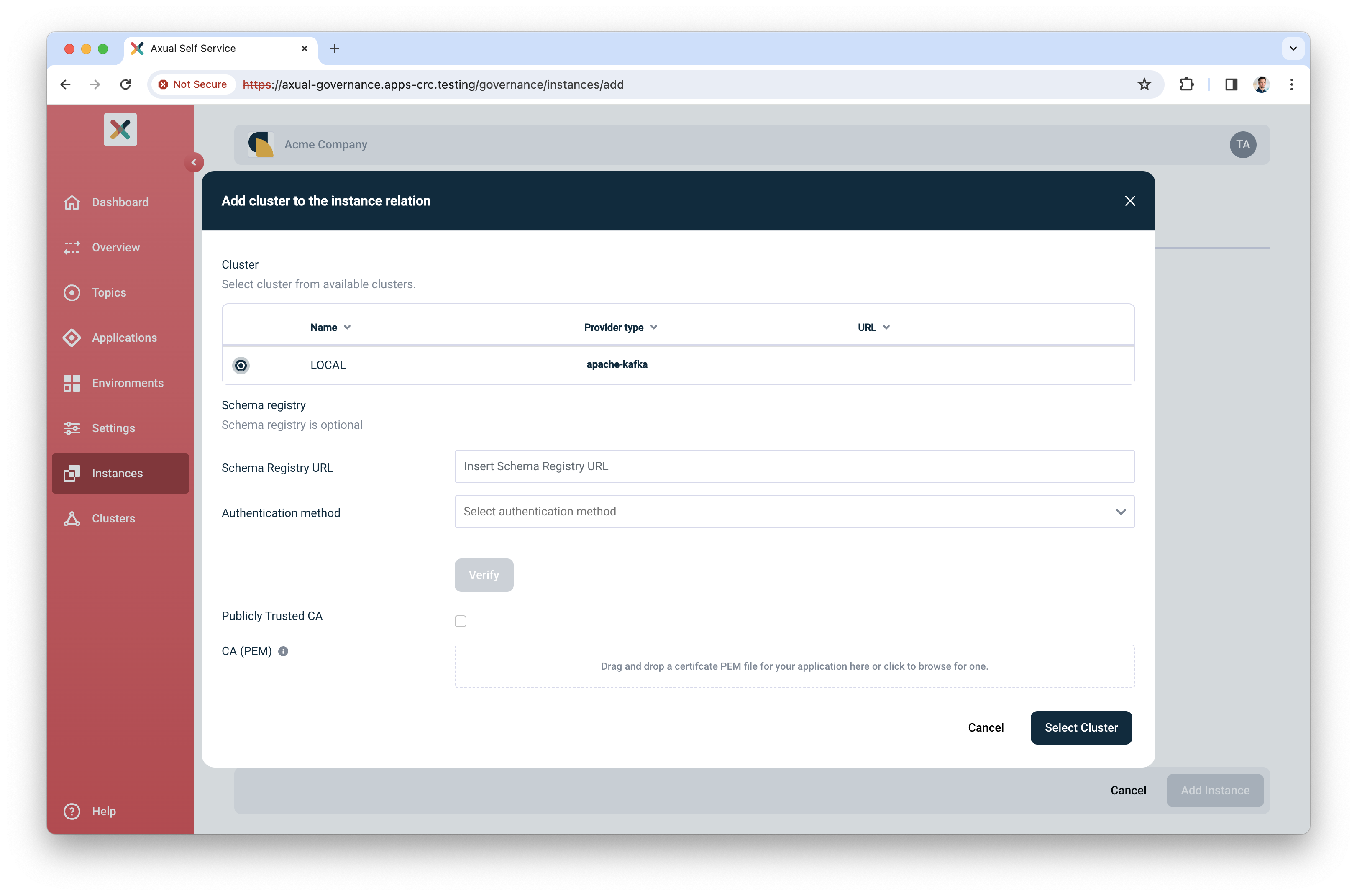

Clusters: click "Select cluster", the following screen will show

-

Select the cluster you created before

-

Enter the Schema registry URL

-

Select the Authentication method for Schema Registry (No authentication, Basic authentication and TLS are supported)

-

Click "Verify" to check whether the URL and connection details are working

-

Check Publicly trusted CA whenever the certificate of Schema Registry is issued by a publicly trusted CA OR upload the CA used to sign Schema Registry’s certificate.

-

Click "Select cluster" to assign the cluster to the instance

-

-

-

Enable “Environment mapping” to allow anyone with the ENVIRONMENT_AUTHOR role to create, update and edit environments.

-

Leave “Properties” as is.

-

Click “Add Instance” to add the instance.

Creating an environment

- In the menu click "Environments", followed by "New environment"
- Provide the following information:
  - Name: a name to describe your environment (e.g. "Development" or "Acceptance")
  - Short name: a short name for your environment. Depending on the topic pattern, this name might end up in your topic name
  - Description: describe the environment
  - Color: select a color which helps users to identify the environment quickly throughout Self-Service
  - Instance: select the instance you created in the previous step
  - Visibility:
    - Select "Private" if this environment should only be visible to the owner
    - Select "Public" if this environment should be visible to all users
  - Authorization issuer: determine who is responsible for authorizing topic access requests:
    - Select "Auto" if access can be granted automatically (e.g. in Test environments)
    - Select "Stream owner" if access needs to be granted by the topic owner
  - Owner: click "Select cluster", the following screen will show
- Leave "Properties" as is.
- Click "Add Environment" to add the environment

🎉 Congratulations! You have now concluded the first-time setup of Self-Service for topic management. Before you invite anyone in the organization to start using it, complete Step 3: Functional verification.

Step 3: Functional verification

In this step, you are going to:

- create a topic using the Self-Service interface
- authorize an application to produce data to it
- produce messages to the topic you created
- verify the messages have arrived on the topic

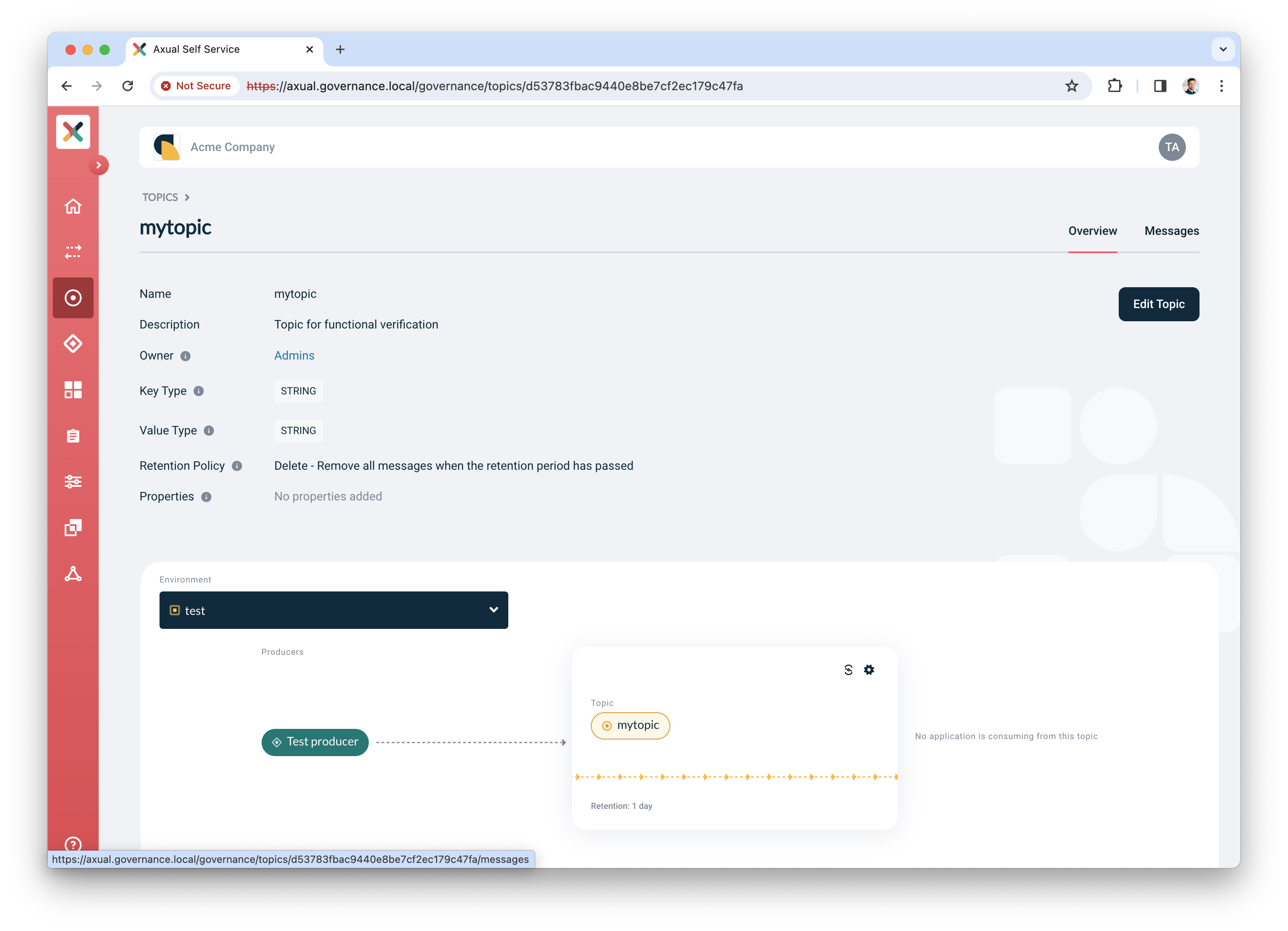

Creating and configuring a topic

-

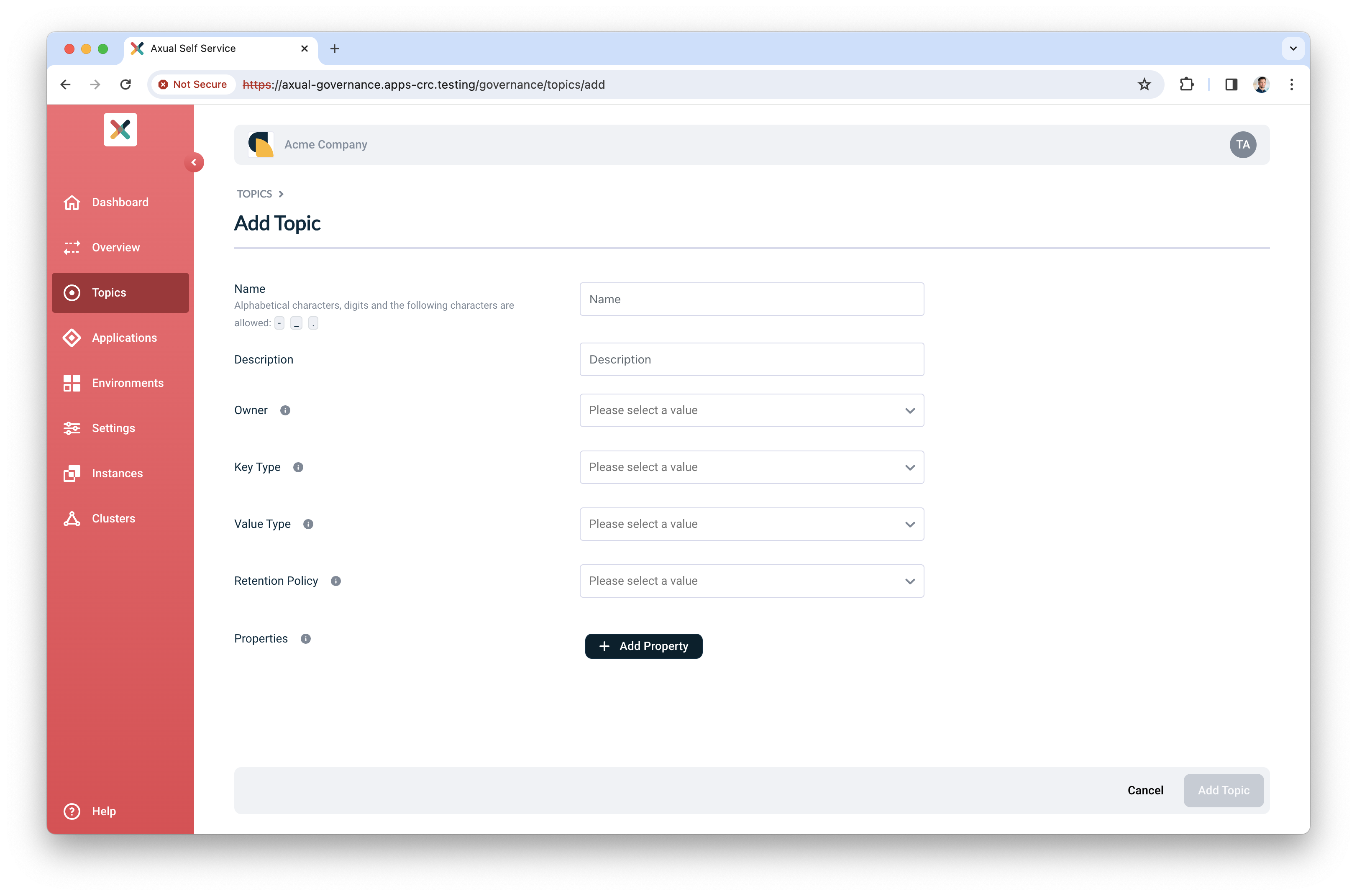

In the menu, click "Topics", followed by "New topic"

-

Provide the following details for the topic:

-

Name: use "mytopic" as the name

-

Description: use something like "Topic for functional verification"

-

Owner: select the team you are member of, "Admins"

-

Key Type: to keep things simple, use "String" as the key type

-

Value Type: to keep things simple, use "String" as the value type

-

Retention Policy: select "Delete"

-

-

Leave "Properties" as is

-

Click "Add Topic" to add the topic

-

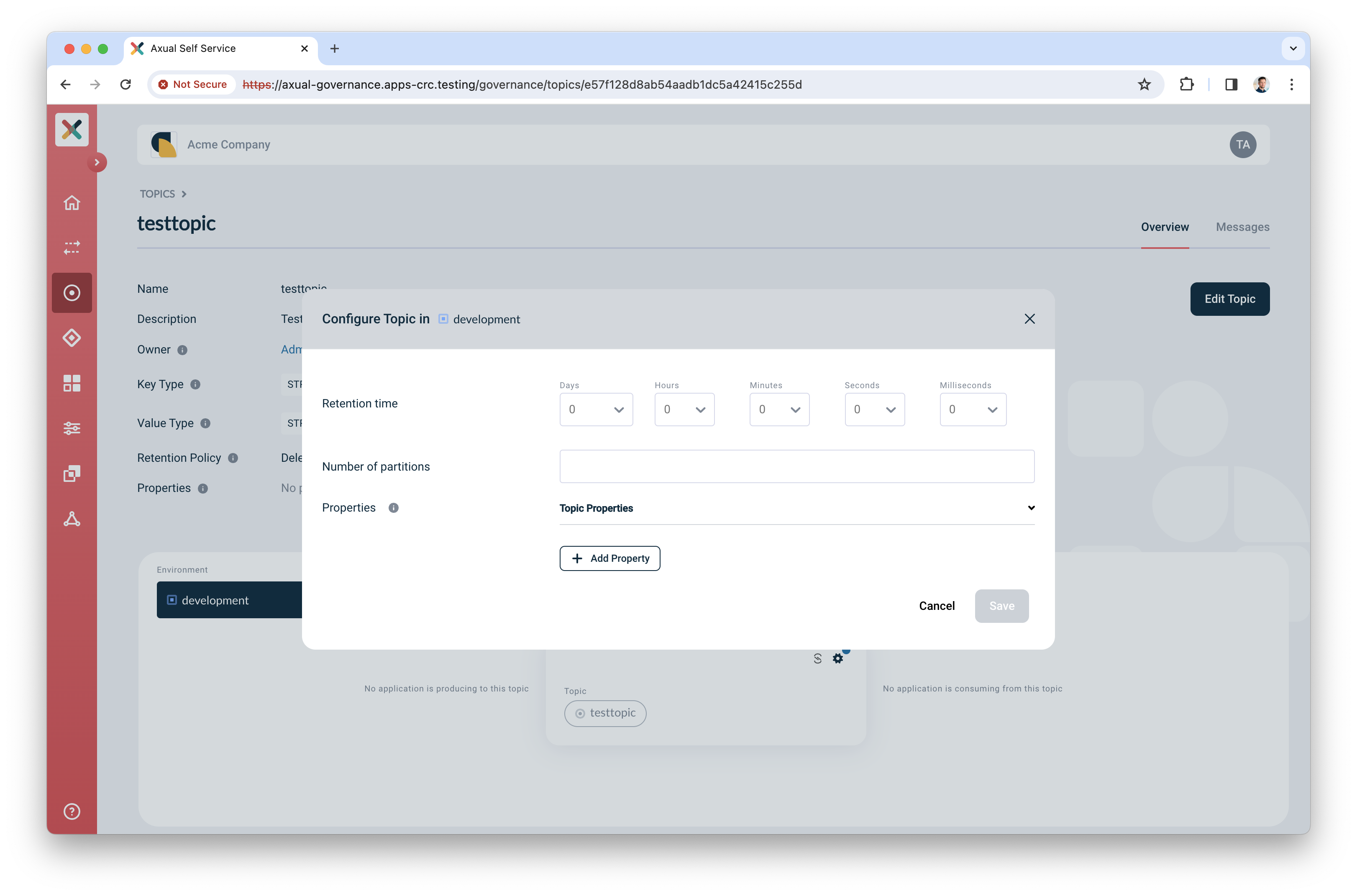

Next, configure the topic, so it is created in the Kafka cluster with appropriate settings. Click the "Configure topic" icon to open the modal with configuration options.

-

In the window that opens, define the following details:

-

Retention time: choose any retention time for this test topic, e.g. "1 day"

-

Number of partitions: use "4"

-

-

Leave "Properties" as is".

-

Click "Save" to create the topic on the Kafka cluster.

Creating and authorizing the application

Next, you will create an application and authorize it to produce to the topic.

-

In the menu, click "Applications", followed by "New application"

-

Provide the following details for the application:

-

ID: use "io.testing.producer"

-

Name: use "Test producer"

-

Short name: use "test_producer"

-

Owner: select "Admins"

-

Application type: select "Custom", followed by "Java"

-

Visibility: select "Public"

-

Description: use "Simple producer app"

-

-

Click "Add application" to add the application

-

Select the environment you created before as part of Preparing Self-Service

-

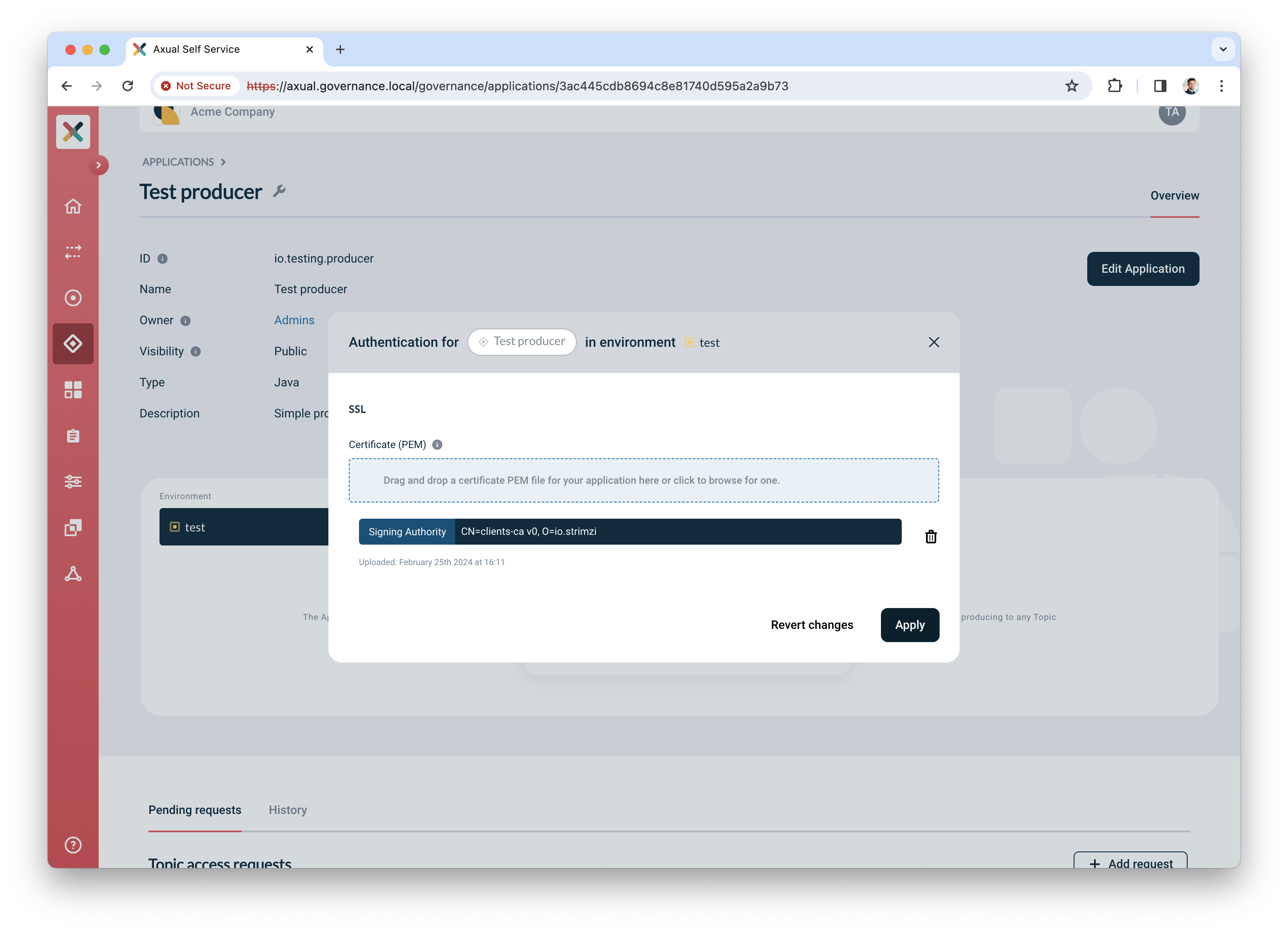

Click the "Authentication" icon to add credentials for the producer app

-

Upload a certificate (PEM) for an application which you want to authorize to produce.

Make sure the application certificate is signed by a Certificate Authority (CA) which is trusted by the broker

-

Click "Add request" to request produce access on your topic

-

Select "Producer" for produce access

-

Select topic "mytopic"

-

Click "Request approval" to make sure the app with the uploaded certificate can produce

Your app is ready and authorized to produce. Complete your verification by producing some data on the topic.

Producing some data

We are going to use the tool kcat to produce some data to the topic.

- Install `kcat` on your machine, following the kcat installation instructions.
- Create a file named "kcat.conf" with the following contents

      # Bootstrap server URL and port
      bootstrap.servers=bootstrap.servers.url:port
      security.protocol=SSL
      # For ssl.key.location and ssl.certificate.location, use the private key and certificate of the app (PEM)
      # It is the same certificate you uploaded in "Creating and authorizing the application" above
      ssl.key.location=clients-ca.key
      ssl.certificate.location=clients-ca.crt
      # For ssl.ca.location, use the certificate of the CA which signed the broker's certificate
      ssl.ca.location=cluster-ca.crt

- Produce some messages to the topic with the following command:

      echo "Test message contents" | kcat -F kcat.conf -P -t loc-test-mytopic -k "Test key"

  Be sure to use the topic name which respects the pattern. In the example above you can see that you are producing a message to topic `mytopic` in environment `test`, instance `loc`.
- If you managed to produce messages successfully, you will not see any errors. The final verification is done in Self-Service in the next section.
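The topic name passed to `kcat` above follows from the topic pattern configured while onboarding the cluster. As an illustration, the sketch below fills in the `{instance}-{environment}-{topic}` pattern with the short names from the example (`loc`, `test`) and the topic `mytopic`. The function `resolve_topic_name` is a hypothetical helper for this walkthrough, not a tool shipped with Axual Governance.

```shell
#!/usr/bin/env bash
# Hypothetical helper: substitute the placeholders of a topic pattern.
# Arguments: <pattern> <instance-short-name> <environment-short-name> <topic>
resolve_topic_name() {
  local name="$1" instance="$2" environment="$3" topic="$4"
  # Placeholders are kept in variables and quoted so they are matched literally
  local ph_instance='{instance}' ph_environment='{environment}' ph_topic='{topic}'
  name="${name//"$ph_instance"/$instance}"
  name="${name//"$ph_environment"/$environment}"
  name="${name//"$ph_topic"/$topic}"
  printf '%s\n' "$name"
}

# Prints: loc-test-mytopic
resolve_topic_name '{instance}-{environment}-{topic}' loc test mytopic
```

If your own instance or environment uses different short names, substitute them here and use the resulting name as the `-t` argument to `kcat`.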

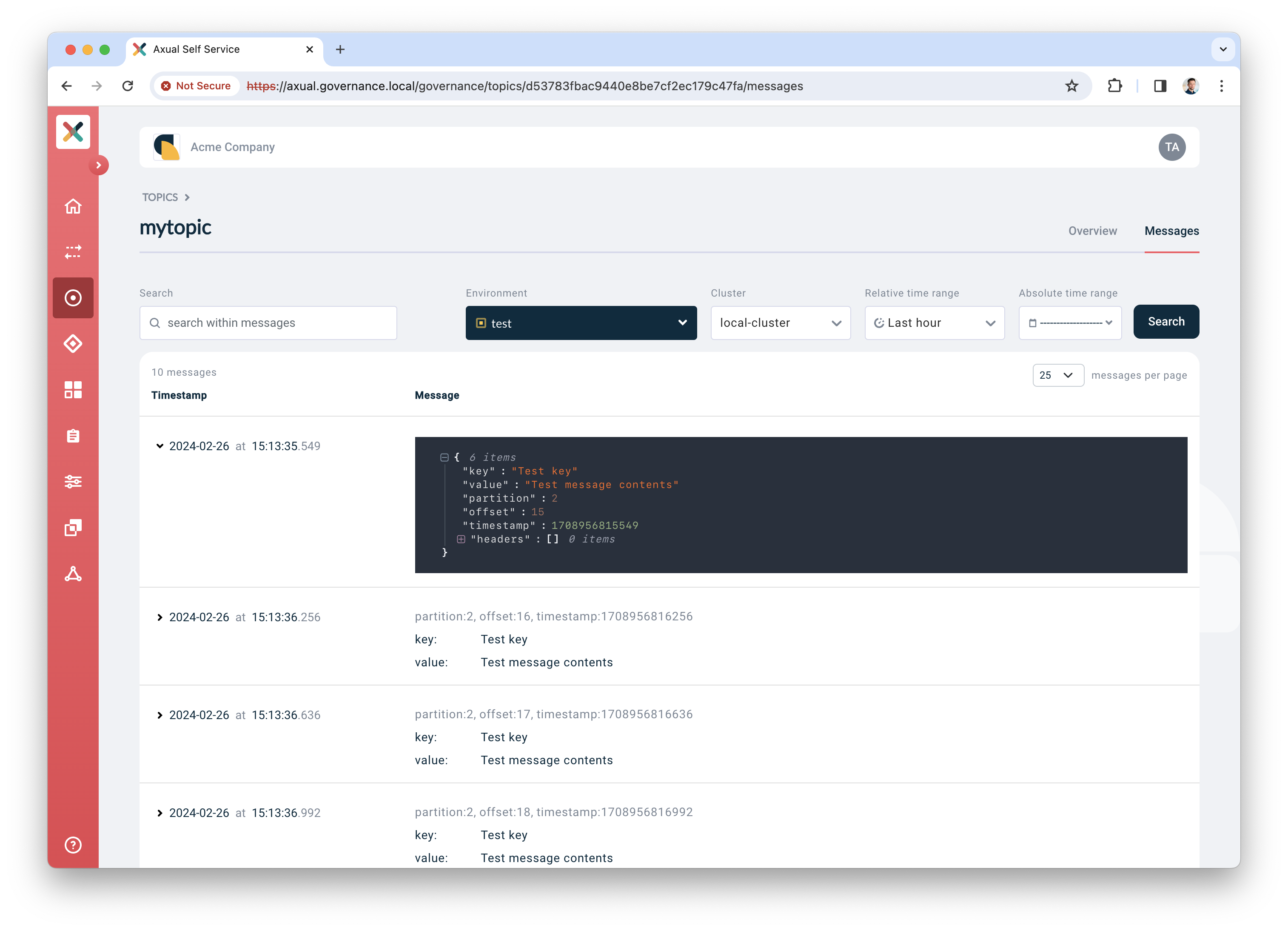

Verification

The last step in the verification process is done in Self-Service, by using topic browse & search.

- Find the topic in Self-Service and navigate to the topic detail page
- Click the "Messages" tab
- Click "Search" to accept the default search options and browse for messages on the topic right away. If everything is configured correctly, the messages should become visible below the search controls.

Click a row to expand it and show details for a given message.

Conclusion

You have now concluded the first-time setup of Self-Service for topic management on OpenShift and you have verified the integration between Axual Governance and your Kafka cluster.

Preparing for a production-like setup

For a production-like setup, you need to do more advanced configuration of the platform. This falls beyond the scope of this "How-To", but is explained in the Install the Axual Governance chapter.

Need support?

Please navigate to our Support portal and select Additional » Product trial questions to submit a support request specifically for your trial.