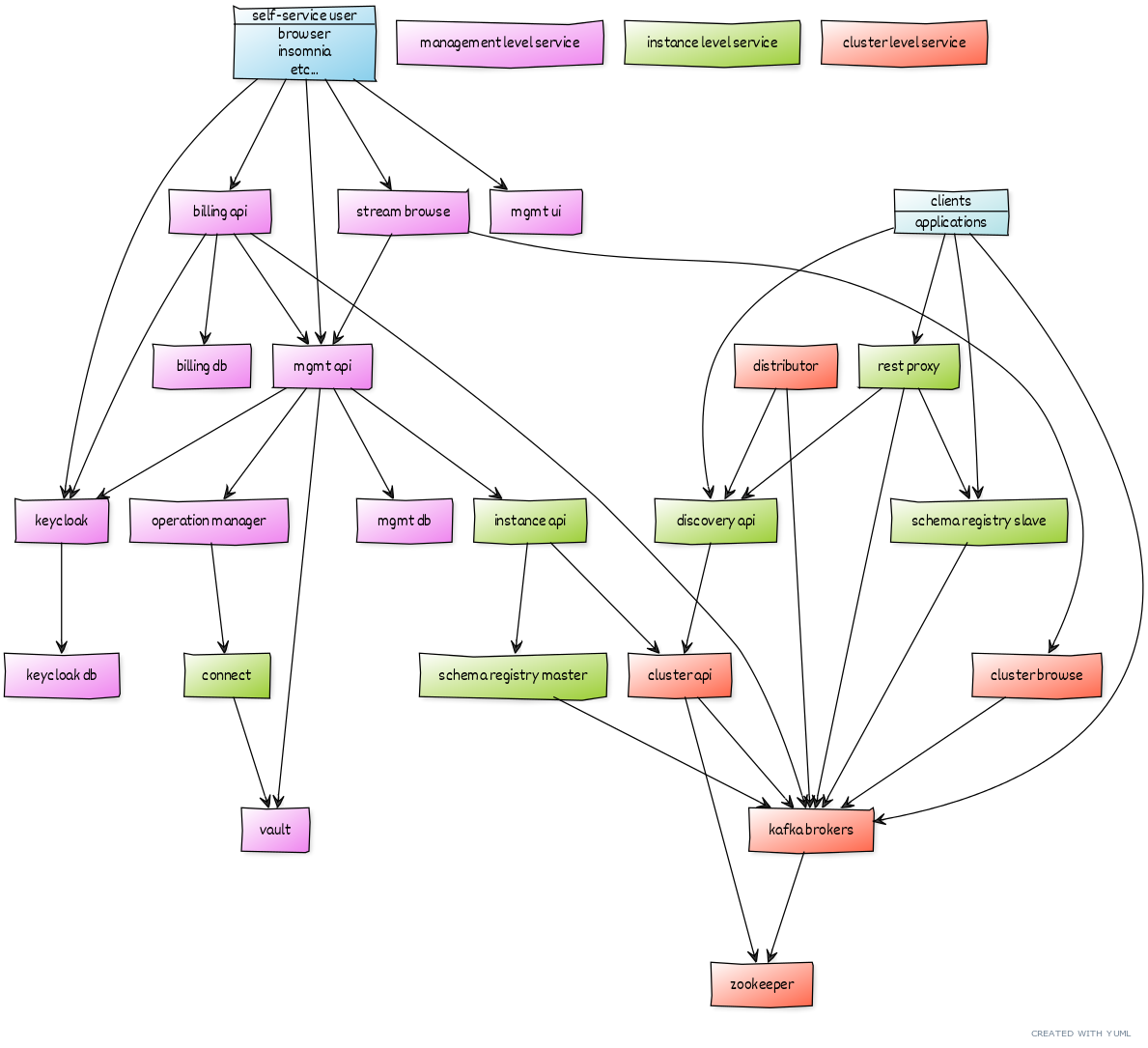

Axual Platform Components and Process Flow

Component Types

The Axual infrastructure is designed to support multiple clusters, tenants, and instances (environments).

Likewise, many of our microservices are built to operate at either the cluster level or the instance level.

We support multiple clusters to enable failover and to give clients in different locations faster connections. For example, customers in Asia can connect to a cluster in Asia, and customers in Europe to a cluster in Europe.

The main concepts are:

- Tenant - A tenant is generally a self-standing organizational unit.

- Instance - An instance is generally one of an organization's environments, for example development, test, or acceptance. Each instance has its own cluster setup.

- Cluster - A cluster is a Kafka installation running at a specific location.

Our microservices can be split into four types:

- Management Services - Services that run as a single master service. For example, there is one Platform Manager, which connects to one database (optionally a database cluster).

- Instance Services - The management services communicate with the customer's instance services. An instance service runs commands and keeps them synchronized across all cluster services of its instance. For example, the Management Service may tell the Instance Service to create a topic in Kafka, and the Instance Service then forwards that command to each cluster.

- Cluster Services - A cluster service runs specific commands inside a single cluster, for example creating one topic in one Kafka installation.

- Client Services - A client service is a Kafka producer or consumer client.
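The command flow between the service types can be sketched as a minimal Python model. The class names `ManagementService`, `InstanceService`, and `ClusterService` and the `create_topic` method are hypothetical and do not correspond to actual Axual APIs; a cluster is reduced to an in-memory set of topic names.

```python
"""Hypothetical model of the Management -> Instance -> Cluster command flow.

All class and method names are illustrative assumptions, not Axual APIs.
"""
from dataclasses import dataclass, field


@dataclass
class ClusterService:
    """Runs a command inside exactly one cluster (modelled as a set of topic names)."""
    name: str
    topics: set = field(default_factory=set)

    def create_topic(self, topic: str) -> str:
        self.topics.add(topic)
        return f"{self.name}: created {topic}"


@dataclass
class InstanceService:
    """Forwards each command to every cluster service of its instance."""
    name: str
    clusters: list

    def create_topic(self, topic: str) -> list:
        # Fan out: the same command runs on each cluster so the clusters stay in sync.
        return [cluster.create_topic(topic) for cluster in self.clusters]


class ManagementService:
    """Single master service that addresses one instance service per instance."""

    def __init__(self, instances: dict):
        self.instances = instances

    def create_topic(self, instance_name: str, topic: str) -> list:
        return self.instances[instance_name].create_topic(topic)


if __name__ == "__main__":
    asia = ClusterService("asia")
    europe = ClusterService("europe")
    test_env = InstanceService("test", [asia, europe])
    manager = ManagementService({"test": test_env})
    for line in manager.create_topic("test", "payments"):
        print(line)  # "asia: created payments", then "europe: created payments"
```

In this sketch, keeping the fan-out in the instance service means the management service only needs to know one instance service per environment, however many clusters that environment has.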

Axual Components

On the deployment page, the Axual components are listed and grouped by component type: Instance, Cluster, Client, and Management. This page lists the Axual modules and describes the function of each one and its role in the system process flow.

Kafka

The standard Kafka components are:

Broker

Kafka is run as a cluster of one or more servers that can span multiple data centers or cloud regions. Some of these servers form the storage layer and are called brokers. Other servers run Kafka Connect to continuously import and export data as event streams, integrating Kafka with existing systems such as relational databases (for example order, CRM, or ERP systems), IoT data, clickstreams, and other Kafka clusters. To let customers implement mission-critical use cases, a Kafka cluster is highly scalable and fault-tolerant: if any of its components fail, others take over their work to ensure continuous operation without data loss. (Cluster Service)
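The fault-tolerance claim can be made concrete with Kafka's standard replication settings `replication.factor` and `min.insync.replicas`: a producer using `acks=all` can keep writing to a partition as long as at least `min.insync.replicas` of its replicas are in sync. The function name below is illustrative, and the sketch assumes all replicas are in sync before any broker fails and counts only brokers that host a replica of the partition.

```python
def writable_failures(replication_factor: int, min_insync_replicas: int) -> int:
    """Broker failures a partition survives while acks=all producers can still write.

    Assumes every replica is in sync before the failures and counts only
    brokers that host a replica of this partition.
    """
    if not 1 <= min_insync_replicas <= replication_factor:
        raise ValueError("min.insync.replicas must be between 1 and the replication factor")
    # Writes succeed while the in-sync replica count stays >= min.insync.replicas.
    return replication_factor - min_insync_replicas


if __name__ == "__main__":
    print(writable_failures(3, 2))  # 1
```

For the common setting of a replication factor of 3 with `min.insync.replicas=2`, one broker hosting the partition can fail without blocking writes; a second failure would cause `acks=all` produce requests to be rejected until a replica catches up again.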

ZooKeeper

ZooKeeper is used in distributed systems for service synchronization and as a naming registry. When working with Apache Kafka, ZooKeeper is primarily used to track the status of the (broker) nodes in the Kafka cluster and to maintain cluster metadata such as the list of Kafka topics and their configuration. (Cluster Service)

Connect

We use our own version of Kafka Connect. Connect is a standard Kafka component, but we have replaced it with a custom version of the tool (see Axual Connect). It is used to exchange data to and from the cluster. (Client Service)

Schema Registry

We use our own version of Schema Registry. Schema Registry is a standard component of the Kafka ecosystem, but we have replaced it with a custom version of the tool. It stores the versions of the schema for each topic, so that consumers can decode both old and new messages as the schema changes over time. This provides backward and forward compatibility for data exchange. (Instance Service)

User Interface

Platform UI

The Platform UI (also referred to as the management UI) is a web application built with ReactJS. It allows users to browse and update their Kafka configuration. The UI also provides status and inspection features such as Stream Browse and Connector Status. (Management Service)

Management DB

Like most applications, the platform uses a database: the Management DB, which is a MariaDB database. Almost all of the information shown in the Platform UI is stored in the Management DB. Only the data for Stream Browse, Connector Status, and the connector certificate store is kept in other locations. (Management Service)

Programming Environment

Java Client

The Java client is a Java library that simplifies Kafka programming by handling authentication and cluster failover. (Client Service)

Java Proxy Client

The Java client uses Axual-specific programming methods. The Java Proxy Client is less intuitive to use, but it uses the standard Kafka client API, which can make it easier to understand for developers who already know Kafka. It is also the better choice when working with Spring Cloud Stream. (Client Service)

Instance Services

Instance Shells around Kafka Components

Axual wraps the Kafka components in a shell that translates Axual commands into standard Kafka commands.

Cluster API

The Cluster API is a shell that exposes part of the Kafka broker's functionality. It creates, updates, and queries Kafka topics by translating API commands into Kafka commands. Most client requests, such as produce and consume requests, do not pass through the Cluster API; they are made directly to the Kafka brokers. (Cluster Service)

Axual Connect

Axual Connect is a version of Kafka Connect wrapped in an Axual shell. Kafka Connect is a tool for scalably and reliably streaming data between Apache Kafka® and other data systems. It makes it simple to quickly define connectors that move large data sets into and out of Kafka. Kafka Connect can ingest entire databases or collect metrics from all your application servers into Kafka topics, making the data available for stream processing with low latency. An export connector can deliver data from Kafka topics into secondary indexes such as Elasticsearch, or into batch systems such as Hadoop for offline analysis. (Client Service)

Vault

The Vault stores client certificates. Axual Connect retrieves certificates from it so that connectors can authenticate when connecting to the brokers.

Rest Proxy

Kafka connections are generally programmed using one of the clients. The Rest Proxy instead provides a REST API for connecting to Kafka streams. The API is useful for simple IoT apps or legacy interfaces that need a REST endpoint. (Client Service)

Stream Browse

Stream Browse is used, generally by the Platform UI, to browse the messages in a stream. (Cluster Service)

Strimzi

The Strimzi operator is a tool, made available under the Strimzi open source project, that simplifies how Kafka is configured, deployed, and managed on Kubernetes. It allows developers to use familiar Kubernetes processes to set up Kafka without digging deep into the technical details of the infrastructure.

With Strimzi, Kafka is configured on Kubernetes declaratively: you describe the desired Kafka cluster in Kubernetes custom resources, and the operator creates and maintains it accordingly. Strimzi is not only a user-friendly way to configure Kafka; it is also built with fundamental security features such as TLS encryption and authentication. (Cluster Service)

KSML

KSML is a low-code tool for creating Kafka/Axual stream applications: instead of writing a program, you define the application through configuration. (Client Service)

Schema Registry

Schema Registry provides a serving layer for your metadata. It provides a RESTful interface for storing and retrieving your Avro®, JSON Schema, and Protobuf schemas. It stores a versioned history of all schemas based on a specified subject name strategy. It offers multiple compatibility settings and allows schemas to evolve according to the configured setting. It also provides serializers that plug into Apache Kafka® clients and handle schema storage and retrieval for Kafka messages sent in any of the supported formats.

Schema Registry lives outside of and separately from your Kafka brokers. Your producers and consumers still talk to Kafka to publish and read data (messages) to topics. Concurrently, they can also talk to Schema Registry to send and retrieve schemas describing the messages' data models. (Instance Service)
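The idea behind compatibility settings can be illustrated with a deliberately simplified check. The sketch below models a record schema as a Python dict mapping field names to a type and an optional default, and approximates Avro-style rules: a reader can decode data if every field it expects either exists in the writer's schema with the same type or has a default. This is an assumption-laden approximation, not Schema Registry's actual algorithm, which also handles type promotion, unions, nested records, and more.

```python
"""Simplified sketch of backward/forward schema compatibility checks.

Schemas are plain dicts: field name -> {"type": ..., optional "default": ...}.
This approximates Avro-style rules; it is NOT Schema Registry's algorithm.
"""


def can_read(reader: dict, writer: dict) -> bool:
    """True if data written with `writer` can be decoded using `reader`."""
    for name, spec in reader.items():
        if name in writer:
            if writer[name]["type"] != spec["type"]:
                return False  # same field, different type (no promotion modelled)
        elif "default" not in spec:
            return False  # reader needs a field the writer never wrote
    return True  # fields that only the writer has are simply ignored


def is_backward_compatible(new: dict, old: dict) -> bool:
    """Consumers using the new schema can read messages written with the old one."""
    return can_read(reader=new, writer=old)


def is_forward_compatible(new: dict, old: dict) -> bool:
    """Consumers still on the old schema can read messages written with the new one."""
    return can_read(reader=old, writer=new)


if __name__ == "__main__":
    v1 = {"id": {"type": "long"}, "name": {"type": "string"}}
    v2 = {**v1, "email": {"type": "string", "default": ""}}  # optional field added
    v3 = {**v1, "country": {"type": "string"}}  # required field added
    print(is_backward_compatible(v2, v1), is_forward_compatible(v2, v1))  # True True
    print(is_backward_compatible(v3, v1), is_forward_compatible(v3, v1))  # False True
```

Adding a field with a default (`v2`) keeps both directions compatible, while adding a required field (`v3`) breaks backward compatibility: consumers on the new schema would find that field missing in old messages.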

Process Flow

The management UI connects to the Cluster API to create and maintain streams and their access control lists (ACLs), which define who is allowed to read from and write to each stream. It also connects to the connectors to issue run, edit, and stop commands. The Platform UI gets most of its data from the Management DB.
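Stream ACLs can be pictured as a deny-by-default table of grants. The following sketch is a hypothetical in-memory model: the `StreamAcls` class, the application IDs, and the `read`/`write` operation names are illustrative assumptions, not the Cluster API's actual data model.

```python
"""Hypothetical in-memory model of stream ACLs (who may read/write which stream).

Names and data layout are illustrative assumptions, not the Cluster API's model.
"""
from collections import defaultdict

READ, WRITE = "read", "write"


class StreamAcls:
    def __init__(self):
        # stream name -> application id -> set of granted operations
        self._grants = defaultdict(lambda: defaultdict(set))

    def grant(self, stream: str, app: str, operation: str) -> None:
        if operation not in (READ, WRITE):
            raise ValueError(f"unknown operation: {operation}")
        self._grants[stream][app].add(operation)

    def revoke(self, stream: str, app: str, operation: str) -> None:
        self._grants[stream][app].discard(operation)

    def is_allowed(self, stream: str, app: str, operation: str) -> bool:
        # Deny by default: anything not explicitly granted is refused.
        # .get() is used so that lookups never create new entries.
        return operation in self._grants.get(stream, {}).get(app, set())


if __name__ == "__main__":
    acls = StreamAcls()
    acls.grant("payments", "billing-app", WRITE)
    acls.grant("payments", "report-app", READ)
    print(acls.is_allowed("payments", "report-app", READ))   # True
    print(acls.is_allowed("payments", "report-app", WRITE))  # False
```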

Client programs connect to the Discovery Agent to find the connection details of the active cluster. They then communicate directly with the brokers and the Schema Registry, as a normal Kafka program does.
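The discovery step can be sketched as follows. The `DiscoveryAgent`, `ClusterInfo`, and `DiscoveringClient` classes, their fields, and the example host names are hypothetical; the sketch only illustrates asking a discovery service for the active cluster, caching the answer, and asking again after a connection failure. It does not describe the actual Discovery Agent protocol.

```python
"""Hypothetical sketch of client-side cluster discovery with failover.

All names, fields, and host names are illustrative assumptions; they do not
describe the actual Discovery Agent protocol.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterInfo:
    name: str
    bootstrap_servers: str
    schema_registry_url: str


class DiscoveryAgent:
    """Knows every cluster of an instance and which one is currently active."""

    def __init__(self, clusters: list):
        self.clusters = {c.name: c for c in clusters}
        self.active = clusters[0].name

    def fail_over(self, to_cluster: str) -> None:
        if to_cluster not in self.clusters:
            raise KeyError(to_cluster)
        self.active = to_cluster

    def lookup(self) -> ClusterInfo:
        return self.clusters[self.active]


class DiscoveringClient:
    """Caches the discovered cluster and re-runs discovery after a connection failure."""

    def __init__(self, agent: DiscoveryAgent):
        self.agent = agent
        self.cluster = agent.lookup()

    def on_connection_failure(self) -> ClusterInfo:
        # Ask the agent again; after a failover it points at a different cluster.
        self.cluster = self.agent.lookup()
        return self.cluster


if __name__ == "__main__":
    eu = ClusterInfo("europe", "broker.eu.example.com:9093", "https://schemas.eu.example.com")
    asia = ClusterInfo("asia", "broker.asia.example.com:9093", "https://schemas.asia.example.com")
    agent = DiscoveryAgent([eu, asia])
    client = DiscoveringClient(agent)
    print(client.cluster.name)  # europe
    agent.fail_over("asia")
    print(client.on_connection_failure().bootstrap_servers)  # broker.asia.example.com:9093
```

After discovery, a real client would use the returned broker and Schema Registry addresses to talk to them directly, which matches the flow described above.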