Using the CLI

Deploying Axual using Axual CLI

All services of Axual Platform have been dockerized. As with any Docker container, deployment involves some complexity in passing the correct environment variables and in setting up networking and persistence (port mapping, volume mapping). The Axual CLI (previously known as Platform Deploy) is designed to bootstrap the configuration for a particular setup and to deploy the platform as a whole. For any deployment scenario the following components are needed:


Setting up configuration

In order to perform a deployment:

- a host needs to be prepared for deployment, meaning it needs a NODENAME, a CONFDIR and access to the Axual CLI; see Preparing a host for deployment
- configuration for all the Instance, Cluster and Client services needs to be prepared; see Configuring a Deployment

Preparing a host for deployment

Deployment using Axual CLI is done on a per-host basis. By default, it looks for a host.sh file in the home directory of the logged-in user. The file contains the unique name of the node within a cluster, NODENAME, as well as a reference to the configuration directory used for deployment, CONFDIR. How this configuration is done is explained in Configuring a Deployment.

An example ~/host.sh file is shown below:

NODENAME=worker-1

CONFDIR="${HOME}/platform-configs"

You can see in the example above that worker-1 is the unique name of this particular host. It is referred to in configuration files that can be found in CONFDIR.
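Because host.sh is plain shell, it can be sourced and checked before a deployment is attempted. The following is a minimal sketch under that assumption; `load_host_config` is a hypothetical helper for illustration and is not part of the Axual CLI.

```shell
#!/bin/sh
# Sketch only: load_host_config is a hypothetical helper, not part of the Axual CLI.
# It sources a host.sh file and verifies that NODENAME and CONFDIR are both set.
load_host_config() {
    host_file="${1:-${HOME}/host.sh}"
    if [ ! -f "${host_file}" ]; then
        echo "host file not found: ${host_file}" >&2
        return 1
    fi
    # Clear stale values so the check below reflects this file only.
    unset NODENAME CONFDIR
    # host.sh contains shell assignments, so sourcing it defines the variables.
    . "${host_file}"
    if [ -z "${NODENAME:-}" ] || [ -z "${CONFDIR:-}" ]; then
        echo "NODENAME and CONFDIR must both be set in ${host_file}" >&2
        return 1
    fi
    echo "node=${NODENAME} confdir=${CONFDIR}"
}

# Usage example with a throwaway file instead of the real ~/host.sh.
demo_host_file="$(mktemp)"
cat > "${demo_host_file}" <<'EOF'
NODENAME=worker-1
CONFDIR="${HOME}/platform-configs"
EOF
load_host_config "${demo_host_file}"
rm -f "${demo_host_file}"
```

Running the check early gives a clear error message when one of the two variables is missing, rather than a failure later in the deployment.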

Configuring a Deployment

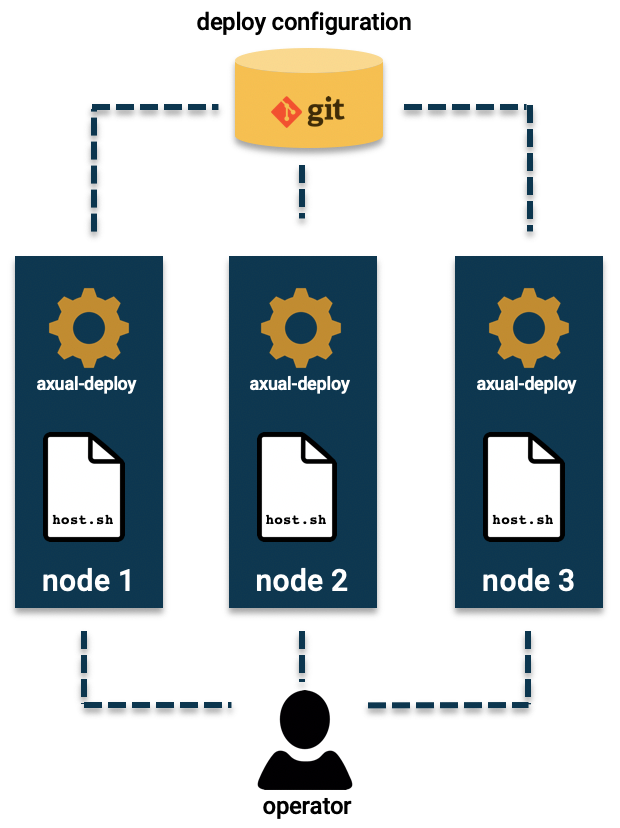

Assuming the deployment configuration of the Axual Platform setup for your organization is stored in a folder (backed by Git), the configuration needs to be organized in directories for Clusters and Tenants.

Clusters

This directory holds subdirectories for any Cluster in a particular setup. This means in a 2 cluster setup, there will be 2 subdirectories. Every cluster directory holds a single configuration file per cluster service (e.g. broker, distributor), as well as a nodes.sh file and a hosts file, for example:

clusters/
└── cluster_x
    ├── broker.sh
    ├── ca
    │   ├── AxualDummyRootCA2018.cer
    │   └── AxualRootCA2018.cer
    ├── cluster-api.sh
    ├── cluster-browse.sh
    ├── cluster-config.sh
    ├── configuration
    │   ├── keycloak
    │   └── alertmanager-config.yml
    ├── data.sh
    ├── discovery-api.sh
    ├── distributor.sh
    ├── exhibitor.sh
    ├── hosts
    ├── keycloak.sh
    ├── mgmt-alert-manager.sh
    ├── mgmt-api.sh
    ├── mgmt-db.sh
    ├── mgmt-grafana.sh
    ├── mgmt-populate-db.sh
    ├── mgmt-prometheus.sh
    ├── mgmt-ui.sh
    ├── monitoring-cadvisor.sh
    ├── monitoring-prometheus-node-exporter.sh
    ├── nodes.sh
    ├── operation-manager.sh
    ├── schemaregistry.sh
    ├── stream-browse.sh
    └── vault.sh

Cluster node configuration: nodes.sh

The nodes.sh file is an important file in any cluster setup. It holds information on which services are started on which nodes within a cluster, as well as other cluster-wide configuration. In the example below you see that in this 1 node cluster, there are 4 cluster services to be started, namely exhibitor, broker, cluster-api and distributor, 5 instance services and 3 management services. Services will be started in the order they are mentioned in this nodes.sh file.

# Name of the cluster

NAME="CLUSTER-FOO"

# Cluster, instance and management services for this cluster

NODE1_CLUSTER_SERVICES=worker-1:exhibitor,broker,cluster-api,distributor

NODE1_INSTANCE_SERVICES=worker-1:sr-slave,discovery-api,sr-master,instance-api,distribution

NODE1_MGMT_SERVICES=worker-1:prometheus,grafana,kafka-health-mono

# Please note INTERCLUSTER_ADVERTISED and APP_ADVERTISED are used for advertised url construction of broker only. All other components need to maintain their advertised urls separately.

NODE1_ADDRESS_MAP=PRIVATE:worker-1.replication:11.222.33.44,INTRACLUSTER:worker-1.internal:11.222.33.44,INTERCLUSTER_BINDING::11.222.33.44,INTERCLUSTER_ADVERTISED:broker-worker-1.company.com:,APP_BINDING::11.222.33.44,APP_ADVERTISED:broker-worker-1.company.com:

NODE1_BROKER_PORT_MAP=PRIVATE:9092,INTRACLUSTER:9094,INTERCLUSTER_BINDING:9095,INTERCLUSTER_ADVERTISED:9095,APP_BINDING:9093,APP_ADVERTISED:9093

# Monitoring configuration: the prometheus running on which cluster should scrape the JMX and prometheus endpoints of the components running on THIS cluster

MONITORING_CLUSTER="CLUSTER-FOO"

# Cluster level configuration: which monitoring services should run on EVERY node of this cluster

MONITORING_SERVICES="prometheus-node-exporter,cadvisor"

# Advanced configuration, used by Discovery API

NETWORK_PROVIDER="cloud-company"

The variable names for the other cluster nodes follow this pattern:

NODE<NUMBER>_<CLUSTER|INSTANCE|MGMT>_SERVICES=<NODENAME>:<SERVICE_X>,<SERVICE_Y>,<SERVICE_Z>
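To illustrate how this naming pattern can be read back, here is a minimal sketch that lists the services assigned to a node, in the order they appear in the file. `services_for_node` is a hypothetical helper, not part of the Axual CLI, and it assumes the values are unquoted, as in the example above.

```shell
#!/bin/sh
# Sketch only: services_for_node is a hypothetical helper, not part of the Axual CLI.
# It prints, one per line and in file order, the services of one level
# (CLUSTER, INSTANCE or MGMT) that a nodes.sh file assigns to a node name.
services_for_node() (
    nodes_file="$1"; level="$2"; node="$3"
    # Split every line at the first "=" into a variable name and its value.
    while IFS='=' read -r key value; do
        case "${key}" in
            NODE[0-9]*_"${level}"_SERVICES) ;;   # NODE<NUMBER>_<LEVEL>_SERVICES
            *) continue ;;                       # comments and other settings
        esac
        # The value has the form <NODENAME>:<SERVICE_X>,<SERVICE_Y>,...
        [ "${value%%:*}" = "${node}" ] || continue
        printf '%s\n' "${value#*:}" | tr ',' '\n'
    done < "${nodes_file}"
)

# Usage example with a throwaway copy of two lines from the nodes.sh example.
demo_nodes="$(mktemp)"
cat > "${demo_nodes}" <<'EOF'
# Cluster, instance and management services for this cluster
NODE1_CLUSTER_SERVICES=worker-1:exhibitor,broker,cluster-api,distributor
NODE1_MGMT_SERVICES=worker-1:prometheus,grafana,kafka-health-mono
EOF
services_for_node "${demo_nodes}" CLUSTER worker-1
rm -f "${demo_nodes}"
```

The helper reads the file as text instead of sourcing it, so it preserves the order of the entries, which is the order in which services are started.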

Cluster service configuration: <service>.sh

Every cluster service has its configuration stored in a file matching the service name, ending with .sh. See below for an example (shortened) Cluster API configuration:

...

# PORTS #

#

# Port at which the web-server is hosted on the host machine

CLUSTERAPI_PORT=9080

# Port at which the web-server is advertised (if, for instance, the webserver is behind a load balancer or a proxy)

CLUSTERAPI_INTERCLUSTER_ADVERTISED_PORT=9080

# URL at which other components will find Cluster-API. Useful to use when behind a load balancer or a proxy.

CLUSTERAPI_INTERCLUSTER_ADVERTISED_HOST="somehost.company.com"

...

Note that all configuration variables start with CLUSTERAPI_ and are in uppercase.
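The naming convention can be checked mechanically. The sketch below is one possible approach, not something the Axual CLI is known to provide: `check_prefix` is a hypothetical helper that reports every variable assigned in a service file that does not start with the expected uppercase prefix.

```shell
#!/bin/sh
# Sketch only: check_prefix is a hypothetical helper, not part of the Axual CLI.
# It fails if a service file assigns a variable that does not start with the
# expected prefix or that is not written in uppercase.
check_prefix() (
    config_file="$1"; prefix="$2"
    # Collect names assigned at the start of a line, then drop the well-formed ones.
    bad="$(grep -E '^[A-Za-z_][A-Za-z0-9_]*=' "${config_file}" |
        cut -d= -f1 |
        grep -Ev "^${prefix}[A-Z0-9_]+\$" || true)"
    [ -z "${bad}" ] && exit 0
    # Word splitting is intended here: one message per offending name.
    printf 'unexpected variable: %s\n' ${bad} >&2
    exit 1
)

# Usage example with a throwaway file based on the Cluster API example.
demo_service="$(mktemp)"
cat > "${demo_service}" <<'EOF'
# Port at which the web-server is hosted on the host machine
CLUSTERAPI_PORT=9080
CLUSTERAPI_INTERCLUSTER_ADVERTISED_PORT=9080
CLUSTERAPI_INTERCLUSTER_ADVERTISED_HOST="somehost.company.com"
EOF
check_prefix "${demo_service}" CLUSTERAPI_ && echo "all variables use the CLUSTERAPI_ prefix"
rm -f "${demo_service}"
```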

Hosts configuration: hosts

The hosts file is used by some cluster services to resolve the IP addresses assigned to those services. Its entries are passed to every Docker container with the --add-host option. An example hosts file is shown below:

broker-worker-1.company.com 11.222.33.44

clusterapi-worker-1.company.com 11.222.33.44

broker-worker-2.company.com 11.222.33.55

clusterapi-worker-2.company.com 11.222.33.55

instanceapi.company.com 11.222.33.44

schemas.company.com 11.222.33.44

discoveryapi.company.com 11.222.33.44

foo-worker-1.internal 11.222.33.44

foo-worker-1.replication 11.222.30.40

bar-worker-1.internal 11.222.33.55

bar-worker-1.replication 11.222.30.40

Tenants

This directory holds a subdirectory for every Tenant in a particular setup, as well as its Instances.

Within a tenant’s context, multiple instances might be defined. For every instance, a directory can be found under the tenant subdirectory.

Every instance directory holds a single configuration file per instance service (e.g. Instance API, Discovery API), as well as an instance-config.sh file, for example:

company/
├── instances
│   └── OTA
│       ├── axual-connect.sh
│       ├── ca
│       │   ├── AxualDummyRootCA2018.cer
│       │   ├── AxualRootCA2018.cer
│       │   └── DigiCert-High-Assurance-EV-Root-CA.cer
│       ├── discovery-api.sh
│       ├── distribution.sh
│       ├── instance-api.sh
│       ├── instance-config.sh
│       ├── rest-proxy.sh
│       └── schemaregistry.sh
└── tenant-config.sh

Tenant configuration: tenant-config.sh

The tenant-config.sh file contains 2 configuration variables that are deprecated, and will be deleted in the future.

# Non-functional variable. It exists because platform-deploy has a 'get_tenant_name' function.

# It may be used in the future for logging purposes, but at the moment it's completely unused.

NAME="demo"

# Needed by Discovery API for compatibility with older client-library versions

# The /v2 endpoint returns the system name in the response body.

SYSTEM="demo-system"

Instance configuration: instance-config.sh

The instance-config.sh contains configuration settings that apply to all instance services.

# Note: the following properties apply to all instance-services in this instance, as well as all docker containers running on the clusters this instance spans

# The name of this instance. It's used for:

# - naming distribution connect-jobs

# - part of the name of every topic owned by this instance,

# for brokers whose cluster-api use the instance name in the topic naming convention

# - discovery api uses it as "discovery.environment" property

NAME="OTA"

# The name of the cluster which runs management services, like Prometheus, Management API and Management UI

# that should be managing this instance.

MANAGEMENT_CLUSTER="management-cluster-name"

# The name of the cluster which runs client services, like Connect, which should run as part of this instance.

CLIENT_CLUSTER="client-cluster-name"

Instance service configuration: <service>.sh

Every instance service has its configuration stored in a file matching the service name, ending with .sh. See below for an example (shortened) Discovery API configuration:

...

# Discovery API is behind an instance-load-balancer.

# This is the HOSTNAME (no protocol / port) of the load balancer.

# This load balancer should do just forwarding, the Discovery API itself handles SSL

DISCOVERYAPI_ADVERTISED_HOST="11.222.33.44"

# The value for the "server.ssl.enabled-protocols" config in the discovery-api.

# If this property is missing or empty, the default is "TLSv1.2"

# There was no documentation to back up prefixing protocols with the plus sign, but

# practice shows it's needed in order to support all of them at once.

DISCOVERYAPI_SERVER_SSL_PROTOCOLS="+TLSv1.1,+TLSv1.2"

# PORTS #

# Instance-level defined ports are comma separated pairs of "cluster-name,port"

# The port at which the discovery API load balancers are available per cluster, be it SSL or not.

DISCOVERYAPI_CLUSTER_ADVERTISED_PORT=discoveryapi.company.com:443

...

Note that all configuration variables start with DISCOVERYAPI_ and are in uppercase.
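The comment on DISCOVERYAPI_SERVER_SSL_PROTOCOLS states that a missing or empty value falls back to "TLSv1.2". In shell, that rule corresponds to the `:-` parameter expansion. The sketch below only illustrates the rule; `effective_ssl_protocols` is a hypothetical helper, and how the Discovery API itself applies the default is not shown in this document.

```shell
#!/bin/sh
# Sketch only: effective_ssl_protocols is a hypothetical helper, not part of the Axual CLI.
effective_ssl_protocols() {
    # ":-" substitutes the default when the variable is unset or empty.
    echo "${DISCOVERYAPI_SERVER_SSL_PROTOCOLS:-TLSv1.2}"
}

# Missing value: the documented default is used.
unset DISCOVERYAPI_SERVER_SSL_PROTOCOLS
effective_ssl_protocols

# Explicit value, as in the example configuration above.
DISCOVERYAPI_SERVER_SSL_PROTOCOLS="+TLSv1.1,+TLSv1.2"
effective_ssl_protocols
```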

Using Axual CLI

For every new version of the platform a new Axual CLI is released, as can be seen in the release notes. This is because depending on what functionality has been introduced, deployment functionality or configuration options might have changed as well.

axual.sh reference

Usage:

./axual.sh [OPTIONS] COMMAND [ARG…]

Options

| Option | Description | Example |
|---|---|---|
| | Verbose. Log all underlying docker commands and shell functions | |

Available commands

| Command | Description | Example |
|---|---|---|
| | Start all cluster / instance / mgmt / client level services that should be running on this node. | |
| | Stop all cluster / instance / mgmt / client level services which are running on this machine. | |
| | Restart all cluster / instance / mgmt / client level services which are running on this machine. | |
| | Clean data, containers or images used in an Axual deployment. | |
| | Send the change status command to all instances of the cluster, or get the current status of all instances of the current cluster. | |
| | Send the change status command to a specified instance of the cluster, or get the current status of a specified instance of the current cluster. | |

start, restart and stop

Use ./axual.sh [start|restart|stop] to start, restart or stop (a selection of) instance / cluster / mgmt / client level components. This is always relative to the node this command is run on.

Usages:

- ./axual.sh [start|restart|stop] [instance] [<instance-name>] [<servicename>]
- ./axual.sh [start|restart|stop] [cluster] [<cluster-name>] [<servicename>]
- ./axual.sh [start|restart|stop] [mgmt] [<servicename>]
- ./axual.sh [start|restart|stop] [client] [<instance-name>] [<servicename>]
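The usage patterns above can be turned into concrete command lines. The sketch below is a dry-run helper that only prints the command it would run, so it can be tried without a deployment. `axual_cmd` is a hypothetical wrapper, and the cluster, instance and service names are taken from the examples elsewhere on this page purely for illustration.

```shell
#!/bin/sh
# Sketch only: axual_cmd is a hypothetical dry-run wrapper, not part of the Axual CLI.
# It validates the action and level, then prints the command line without running it.
axual_cmd() {
    if [ "$#" -lt 2 ]; then
        echo "usage: axual_cmd <start|restart|stop> <instance|cluster|mgmt|client> [ARG...]" >&2
        return 1
    fi
    action="$1"; level="$2"; shift 2
    case "${action}" in
        start|restart|stop) ;;
        *) echo "unknown action: ${action}" >&2; return 1 ;;
    esac
    case "${level}" in
        instance|cluster|mgmt|client) ;;
        *) echo "unknown level: ${level}" >&2; return 1 ;;
    esac
    # Rebuild the argument list so that "$*" joins it with single spaces.
    set -- ./axual.sh "${action}" "${level}" "$@"
    echo "$*"
}

# Illustrative invocations, one per level.
axual_cmd start cluster CLUSTER-FOO broker
axual_cmd restart instance company-prod discovery-api
axual_cmd stop mgmt grafana
```

Printing the command first makes it easy to review what would be started or stopped on the current node before running it for real.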

instance

Use ./axual.sh instance <instance-name> to get a specified instance status.

Usage:

`./axual.sh instance <instance-name> get status`

Use ./axual.sh instance <instance-name> to set a specified instance status.

Usage:

`./axual.sh instance <instance-name> set status [metadata|data|offset|app] [on|off]`

cluster

Use ./axual.sh cluster to get the state of all the instances in the cluster.

Usage:

`./axual.sh cluster get status`

Use ./axual.sh cluster to set the state of all the instances in the cluster.

Usage:

`./axual.sh cluster set status [metadata|data|offset|app] [on|off]`

The following statuses can be returned:

- INACTIVE: Not participating actively in the instance.
- READY_FOR_METADATA: Cluster is active and ready to apply topics if necessary.
- READY_FOR_DATA: All metadata on the cluster is up to date and the cluster is ready to receive data from other clusters through Distributor.
- READY_FOR_OFFSETS: Cluster is now ready to receive any unsynchronized consumer offsets.
- READY_FOR_APPLICATIONS: The cluster is fully synched with other clusters in the same instance and is ready to serve client applications.
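When scripting around these statuses, a small lookup keeps the handling in one place. The sketch below assumes the status name has already been extracted from the output of the get status command, since the exact output format is not described here. `describe_status` and `can_serve_clients` are hypothetical helpers, not part of the Axual CLI.

```shell
#!/bin/sh
# Sketch only: both helpers are hypothetical and not part of the Axual CLI.
# describe_status prints a short description paraphrased from the list above.
describe_status() {
    case "$1" in
        INACTIVE) echo "not actively participating in the instance" ;;
        READY_FOR_METADATA) echo "active and ready to apply topics" ;;
        READY_FOR_DATA) echo "metadata up to date, ready to receive data through Distributor" ;;
        READY_FOR_OFFSETS) echo "ready to receive unsynchronized consumer offsets" ;;
        READY_FOR_APPLICATIONS) echo "fully synced and ready to serve client applications" ;;
        *) echo "unknown status: $1" >&2; return 1 ;;
    esac
}

# can_serve_clients succeeds only when client applications can be served.
can_serve_clients() {
    [ "$1" = "READY_FOR_APPLICATIONS" ]
}

# Usage example over all documented statuses.
for status in INACTIVE READY_FOR_METADATA READY_FOR_DATA READY_FOR_OFFSETS READY_FOR_APPLICATIONS; do
    printf '%s: %s\n' "${status}" "$(describe_status "${status}")"
done
```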

Example usage

When referring to an instance, always use the full instance name, including <tenant>. For example, for tenant company and instance prod, the full instance name is company-prod.

| Command | Description |
|---|---|
| | Start |
| | Stop all |
| | Restart |
| | Start |
| | Restart |
| | Stop |
| | Start |
| | Restart |
| | Stop all |
| | Get the current status of tenant |
| | Restart |
| | Stop |
| | Start |