Connect: integrating Axual with external systems

Overview

Kafka Connect is a tool for scalably and reliably streaming data between Apache Kafka and other systems.

It makes it simple to quickly define connectors that move large collections of data into and out of Kafka. Kafka Connect can ingest entire databases or collect metrics from all your application servers into Kafka topics, making the data available for stream processing with low latency. An export job can deliver data from Kafka topics into secondary storage and query systems or into batch systems for offline analysis.

Connect

We offer this functionality under the name Connect, a service wrapper around Kafka Connect - https://kafka.apache.org/documentation/#connect

It extends the basic Kafka Connect functionality to support multiple clusters, tenants and environments. To do so, it uses the Switching and Resolving capabilities of Axual Client Proxy, together with three topics that store connector configuration, offsets and status.

The service can run on multiple machines for the same Axual Instance, forming a Connect Cluster.
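To make the three storage topics and the cluster concept more concrete, the sketch below assembles the corresponding settings of a plain Apache Kafka Connect distributed worker and renders them as properties text. The keys (`group.id`, `config.storage.topic`, `offset.storage.topic`, `status.storage.topic`) are standard Kafka Connect worker settings; every value, and the helper `render_properties`, is a hypothetical placeholder. How Axual Connect sets these internally is not described here, so treat this as an illustration rather than Axual's actual configuration.

```python
# Standard Apache Kafka Connect distributed-worker keys.
# All values are hypothetical placeholders, not Axual defaults.
worker_settings = {
    "bootstrap.servers": "localhost:9092",
    # Workers that share the same group.id form one Connect cluster.
    "group.id": "connect-cluster-example",
    # The three internal topics: connector configuration, offsets, status.
    "config.storage.topic": "connect-configs-example",
    "offset.storage.topic": "connect-offsets-example",
    "status.storage.topic": "connect-status-example",
}


def render_properties(settings):
    """Render a dict as Java-style properties text, one key=value per line."""
    return "\n".join(f"{key}={value}" for key, value in settings.items())
```

Calling `render_properties(worker_settings)` returns the text that would go into a worker properties file in a plain Kafka Connect setup.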

Use Kafka Connect to Import/Export Data

You’ll probably want to use data from other sources or export data from Kafka to other systems. Instead of writing custom integration code, you can use Connect to import or export data.

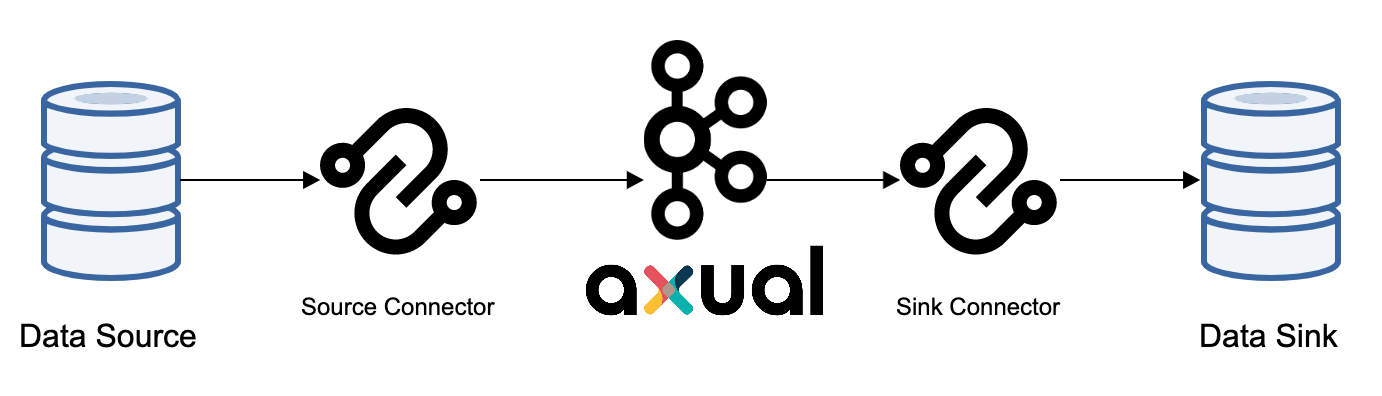

To copy data between Kafka and another system, you use a Connector for the system you want to pull data from or push data to.

Connectors come in two flavors:

- SourceConnectors to import data from another system to Kafka (e.g. JDBCSourceConnector would import a relational database into Kafka).

- SinkConnectors to export data from Kafka to another system (e.g. JDBCSinkConnector would export the contents of a Kafka topic to a relational database).
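As a rough illustration of the two flavors, the sketch below builds one source and one sink connector configuration and wraps each in the request that the standard Apache Kafka Connect REST API accepts (`POST /connectors` with a `name` and a `config` object). It assumes the Confluent JDBC connector, whose class names and keys (`connection.url`, `mode`, `incrementing.column.name`, `topic.prefix`, `topics`) are used here. The URLs, connector names, topic names and the helper `build_create_request` are hypothetical, and whether Axual Connect exposes this REST endpoint directly is not stated in this page.

```python
import json
import urllib.request


def build_create_request(base_url, name, config):
    """Build (but do not send) a Kafka Connect 'create connector' request."""
    payload = {"name": name, "config": config}
    return urllib.request.Request(
        url=f"{base_url}/connectors",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Source connector: reads rows from a database and writes them to Kafka.
# Assumed connector: Confluent JDBC source; all values are placeholders.
jdbc_source_config = {
    "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
    "tasks.max": "1",
    "connection.url": "jdbc:postgresql://db.example.com:5432/shop",
    "mode": "incrementing",            # pick up new rows by a growing id
    "incrementing.column.name": "id",
    "topic.prefix": "shop-",           # table "orders" -> topic "shop-orders"
}

# Sink connector: reads a Kafka topic and writes it to a database.
jdbc_sink_config = {
    "connector.class": "io.confluent.connect.jdbc.JdbcSinkConnector",
    "tasks.max": "1",
    "connection.url": "jdbc:postgresql://warehouse.example.com:5432/reporting",
    "topics": "shop-orders",
}
```

A request built with `build_create_request("http://localhost:8083", "jdbc-source-example", jdbc_source_config)` could then be sent with `urllib.request.urlopen` against a worker that exposes the REST API.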

Known Limitations
